Back at its re:Invent conference in November, AWS announced its $249 DeepLens, a camera that’s specifically geared toward developers who want to build and prototype vision-centric machine learning models. The company started taking pre-orders for DeepLens a few months ago, but now the camera is actually shipping to developers.

Ahead of today’s launch, I had a chance to attend a workshop in Seattle with DeepLens senior product manager Jyothi Nookula and Amazon’s VP for AI Swami Sivasubramanian to get some hands-on time with the hardware and the software services that make it tick.

DeepLens is essentially a small Ubuntu- and Intel Atom-based computer with a built-in camera that’s powerful enough to easily run and evaluate visual machine learning models. In total, DeepLens offers about 106 GFLOPS of performance.

The hardware has all of the usual I/O ports (think Micro HDMI, USB 2.0, audio out, etc.) to let you create prototype applications, whether those are simple toy apps that send you an alert when the camera detects a bear in your backyard or an industrial application that keeps an eye on a conveyor belt in your factory. The 4 megapixel camera isn’t going to win any prizes, but it’s perfectly adequate for most use cases.

Unsurprisingly, DeepLens is deeply integrated with the rest of AWS’s services. Those include the AWS IoT service Greengrass, which you use to deploy models to DeepLens, as well as SageMaker, Amazon’s newest tool for building machine learning models.

These integrations are also what makes getting started with the camera pretty easy. Indeed, if all you want to do is run one of the pre-built samples that AWS provides, it shouldn’t take you more than 10 minutes to set up your DeepLens and deploy one of these models to the camera. Those project templates include an object detection model that can distinguish between 20 objects (though it had some issues with toy dogs, as you can see in the image below), a style transfer example that renders the camera image in the style of van Gogh, a face detection model, a model that can distinguish between cats and dogs, and one that can recognize about 30 different actions (like playing guitar). The DeepLens team is also adding a model for tracking head poses. Oh, and there’s also a hot dog detection model.

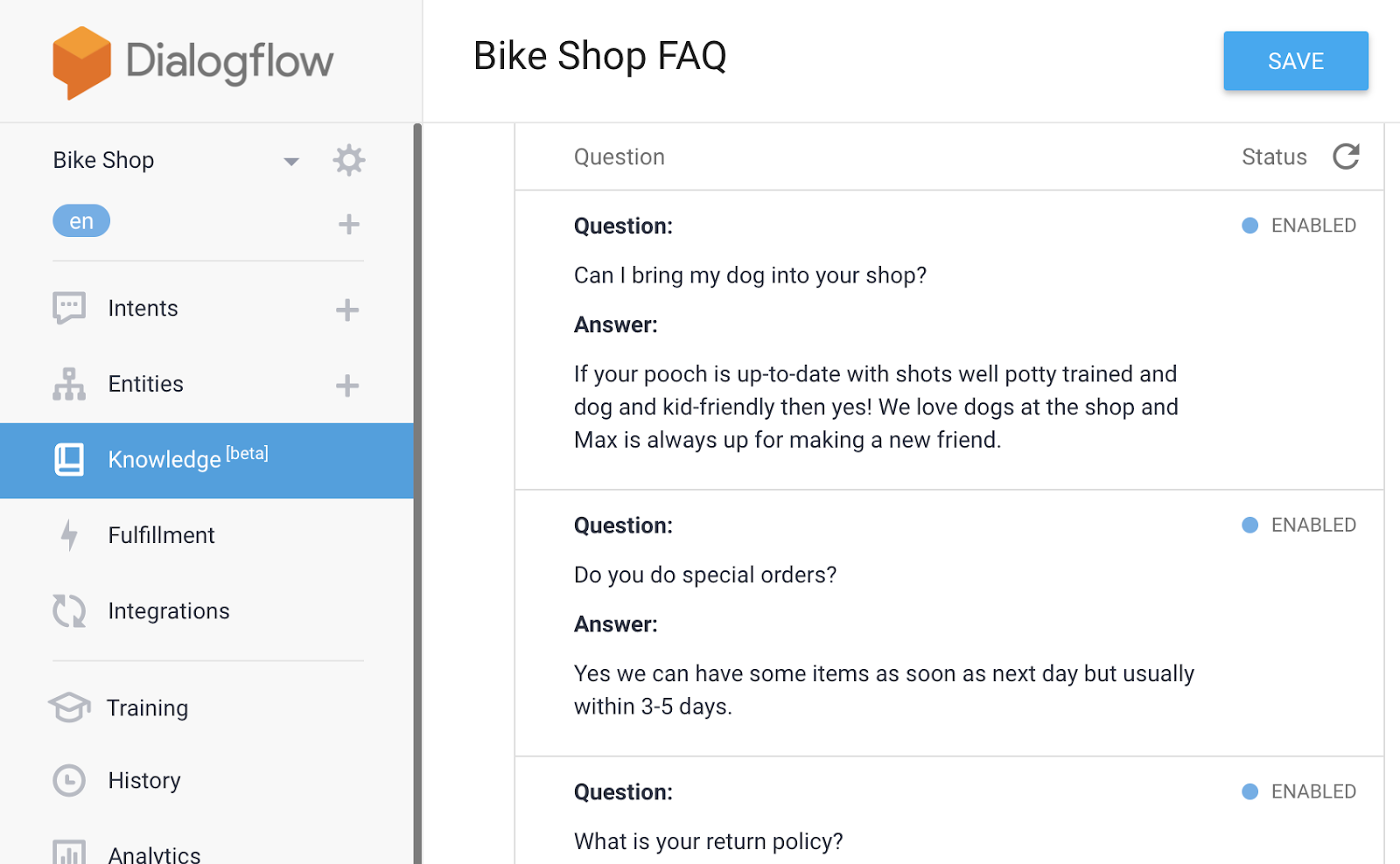

But that’s obviously just the beginning. As the DeepLens team stressed during our workshop, even developers who have never worked with machine learning can take the existing templates and easily extend them. In part, that’s due to the fact that a DeepLens project consists of two parts: the model and a Lambda function that runs instances of the model and lets you perform actions based on the model’s output. And with SageMaker, AWS now offers a tool that also makes it easy to build models without having to manage the underlying infrastructure.
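
To make that two-part split a bit more concrete, here is a minimal Python sketch of the "function" half: code that receives a model’s output for one frame and decides whether to act on it. This is not AWS’s sample code. The `Detection` class, `select_alerts`, `handle_frame` and the stand-in `fake_model` are hypothetical names invented for illustration, and on a real device the inference call would come from the libraries AWS ships with DeepLens rather than from a stub.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Detection:
    """One object reported by the model for a single frame (hypothetical shape)."""
    label: str
    confidence: float  # 0.0 to 1.0


def select_alerts(detections: Iterable[Detection],
                  watch_labels: Iterable[str],
                  min_confidence: float = 0.6) -> List[Detection]:
    """Keep only detections we care about that clear the confidence threshold."""
    watched = set(watch_labels)
    return [d for d in detections
            if d.label in watched and d.confidence >= min_confidence]


def handle_frame(frame,
                 run_model: Callable[[object], List[Detection]],
                 notify: Callable[[str], None],
                 watch_labels: Iterable[str] = ("bear",),
                 min_confidence: float = 0.6) -> int:
    """Run the model on one frame and send a message for each qualifying detection.

    Returns the number of alerts sent.
    """
    detections = run_model(frame)  # part one of the project: the model
    alerts = select_alerts(detections, watch_labels, min_confidence)
    for d in alerts:               # part two: act on the model's output
        notify(f"Detected {d.label} ({d.confidence:.0%} confidence)")
    return len(alerts)


def fake_model(frame) -> List[Detection]:
    """Stand-in for real inference so the sketch runs anywhere (assumption)."""
    return [Detection("dog", 0.91), Detection("bear", 0.83), Detection("bear", 0.40)]


if __name__ == "__main__":
    handle_frame(frame=None, run_model=fake_model, notify=print)
```

Because the model and the notification are passed in as plain callables, the decision logic can be tried out on a laptop with a stub before anything is deployed to the camera.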

You could do a lot of the development on the DeepLens hardware itself, given that it is essentially a small computer, though you’re probably better off using a more powerful machine and then deploying to DeepLens using the AWS Console. If you really wanted to, you could use DeepLens as a low-powered desktop machine as it comes with Ubuntu 16.04 pre-installed.

For developers who know their way around machine learning frameworks, DeepLens makes it easy to import models from virtually all the popular tools, including Caffe, TensorFlow, MXNet and others. It’s worth noting that the AWS team also built a model optimizer for MXNet models that allows them to run more efficiently on the DeepLens device.

So why did AWS build DeepLens? “The whole rationale behind DeepLens came from a simple question that we asked ourselves: How do we put machine learning in the hands of every developer,” Sivasubramanian said. “To that end, we brainstormed a number of ideas and the most promising idea was actually that developers love to build solutions as hands-on fashion on devices.”

And why did AWS decide to build its own hardware instead of simply working with a partner? “We had a specific customer experience in mind and wanted to make sure that the end-to-end experience is really easy,” he said. “So instead of telling somebody to go download this toolkit and then go buy this toolkit from Amazon and then wire all of these together. […] So you have to do like 20 different things, which typically takes two or three days and then you have to put the entire infrastructure together. It takes too long for somebody who’s excited about learning deep learning and building something fun.”

So if you want to get started with deep learning and build some hands-on projects, DeepLens is now available on Amazon. At $249, it’s not cheap, but if you are already using AWS, and maybe even Lambda, it’s probably the easiest way to get started with building these kinds of machine learning-powered applications.
