Shared posts

New in NotebookLM: Customizing your Audio Overviews

The most requested feature for Google's NotebookLM "audio overviews" (aka automatically generated podcast conversations) has been the ability to provide direction to those artificial podcast hosts - setting their expertise level or asking them to focus on specific topics. Today's update adds exactly that:

Now you can provide instructions before you generate a "Deep Dive" Audio Overview. For example, you can focus on specific topics or adjust the expertise level to suit your audience. Think of it like slipping the AI hosts a quick note right before they go on the air, which will change how they cover your material.

I pasted in a link to my post about video scraping and prompted it like this:

You are both pelicans who work as data journalist at a pelican news service. Discuss this from the perspective of pelican data journalists, being sure to inject as many pelican related anecdotes as possible

Here's the resulting 7m40s MP3, and the transcript.

It starts off strong!

You ever find yourself wading through mountains of data trying to pluck out the juicy bits? It's like hunting for a single shrimp in a whole kelp forest, am I right?

Then later:

Think of those facial recognition systems they have for humans. We could have something similar for our finned friends. Although, gotta say, the ethical implications of that kind of tech are a whole other kettle of fish. We pelicans gotta use these tools responsibly and be transparent about it.

And when brainstorming some potential use-cases:

Imagine a pelican citizen journalist being able to analyze footage of a local council meeting, you know, really hold those pelicans in power accountable, or a pelican historian using video scraping to analyze old film reels, uncovering lost details about our pelican ancestors.

Plus this delightful conclusion:

The future of data journalism is looking brighter than a school of silversides reflecting the morning sun. Until next time, keep those wings spread, those eyes sharp, and those minds open. There's a whole ocean of data out there just waiting to be explored.

And yes, people on Reddit have got them to swear.

Tags: notebooklm, data-journalism, google, llms, ai, generative-ai, gemini

What is a "cognitive architecture"?

Update: Several readers have pointed out that the term "cognitive architecture" has a rich history in neuroscience and computational cognitive science. Per Wikipedia, "a cognitive architecture refers to both a theory about the structure of the human mind and to a computational instantiation of such a theory". That definition (and corresponding research and articles on the topic) are more comprehensive than any definition I attempt to offer here, and this blog should instead be read as a mapping of my experience building and helping build LLM-powered applications over the past year to this area of research.

One phrase I’ve used a lot over the past six months (and will likely use more) is “cognitive architecture”. It’s a term I first heard from Flo Crivello - all credit for coming up with it goes to him, and I think it's a fantastic term. So what exactly do I mean by this?

What I mean by cognitive architecture is how your system thinks — in other words, the flow of code/prompts/LLM calls that takes user input and performs actions or generates a response.

I like the word “cognitive” because agentic systems rely on using an LLM to reason about what to do.

I like the word “architecture” because these agentic systems still involve a good amount of engineering similar to traditional system architecture.

Mapping levels of autonomy to cognitive architectures

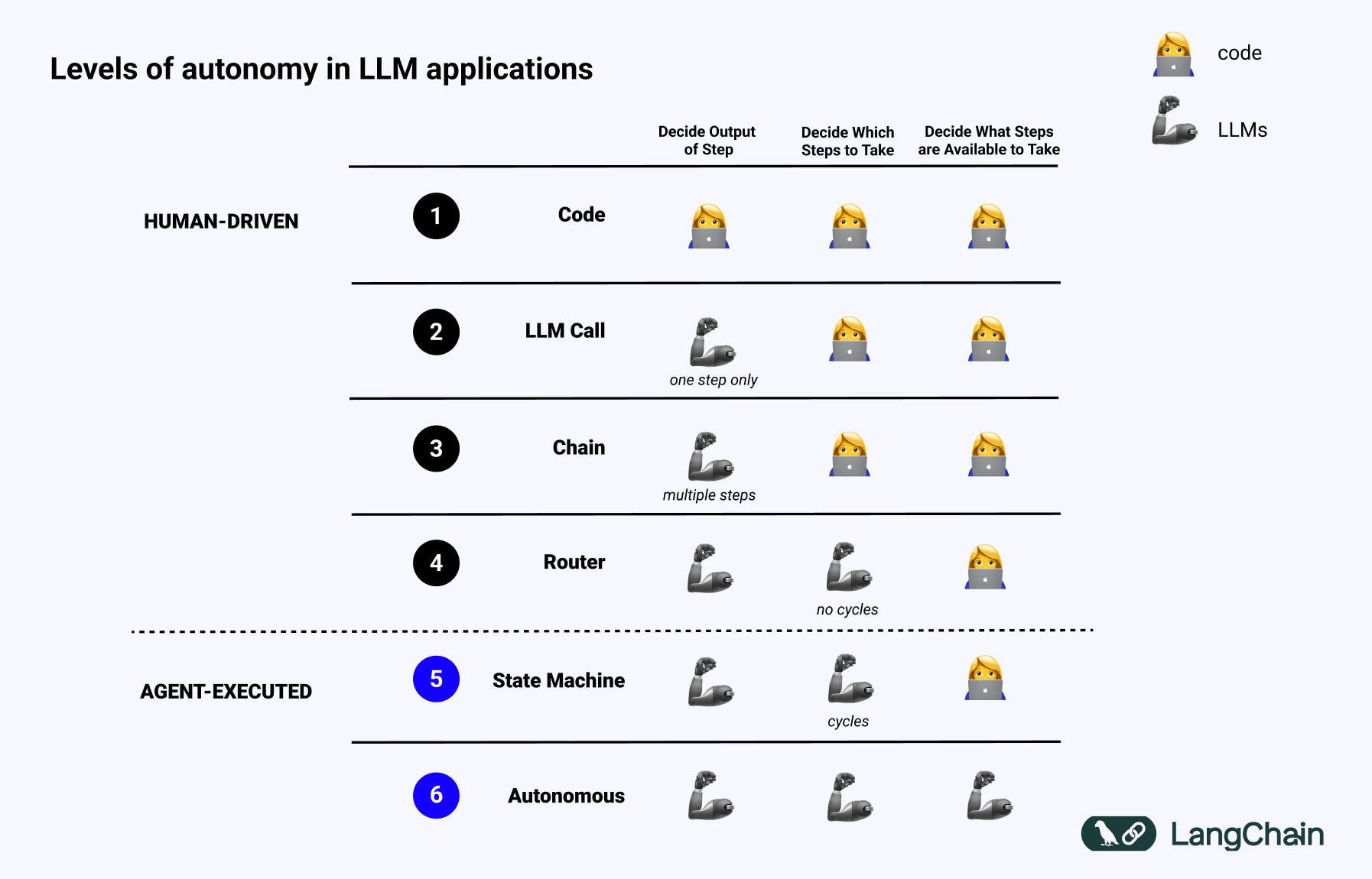

If we refer back to this slide (originally from my TED Talk) on the different levels of autonomy in LLM applications, we can see examples of different cognitive architectures.

First is just code - everything is hard coded. Not even really a cognitive architecture.

Next is just a single LLM call. Some data preprocessing before and/or after, but a single LLM call makes up the majority of the application. Simple chatbots likely fall into this category.

Next is a chain of LLM calls. The sequence can either break the problem down into different steps, or have each call serve a different purpose. More complex RAG pipelines fall into this category: use a first LLM call to generate a search query, then a second LLM call to generate an answer.
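As a rough illustration of the chain level, here is a minimal sketch of the two-call RAG flow described above. Everything in it is a stand-in: `fake_llm` and `search` are hypothetical placeholders for a real model call and a real retriever, and none of this is LangChain's actual API.

```python
from typing import Callable, List

# Hypothetical type alias: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model call (assumption, not a real API)."""
    if prompt.startswith("Write a search query"):
        return "pelican diet"
    return f"ANSWER based on: {prompt}"


def search(query: str) -> List[str]:
    """Hypothetical retriever; a real one would hit a search index or vector store."""
    return [f"doc about {query}"]


def rag_chain(question: str, llm: LLM = fake_llm) -> str:
    # Step 1: the first LLM call turns the question into a search query.
    query = llm(f"Write a search query for: {question}")
    # Step 2: plain code fetches documents for that query.
    docs = search(query)
    # Step 3: the second LLM call writes the answer from the retrieved documents.
    return llm(f"Answer {question!r} using {docs}")


answer = rag_chain("What do pelicans eat?")
```

The steps are fixed ahead of time: the LLM fills in the content of each step, but it never decides which step runs next.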

After that, a router. Prior to this, you knew all the steps the application would take ahead of time. Now, you no longer do. The LLM decides which actions to take. This adds in a bit more randomness and unpredictability.

The next level is what I call a state machine. This combines an LLM doing some routing with a loop. This is even more unpredictable, as by combining the router with a loop, the system could (in theory) invoke an unlimited number of LLM calls.
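To make the router-plus-loop idea concrete, here is a small sketch under stated assumptions: `fake_router_llm` is a hypothetical, deterministic stand-in for an LLM that picks the next action, and the `max_steps` cap is an illustrative guard against the unbounded looping mentioned above. It is not how LangGraph implements this.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]


def fake_router_llm(state: State) -> str:
    """Hypothetical stand-in for an LLM that chooses the next action."""
    if state["draft"] is None:
        return "write"
    if state["revisions"] < 2:
        return "revise"
    return "finish"


def write(state: State) -> None:
    state["draft"] = f"draft for {state['task']}"


def revise(state: State) -> None:
    state["draft"] += " (revised)"
    state["revisions"] += 1


# The set of allowed actions is fixed in code; the router only picks among them.
ACTIONS: Dict[str, Callable[[State], None]] = {"write": write, "revise": revise}


def run_state_machine(task: str, max_steps: int = 10) -> State:
    state: State = {"task": task, "draft": None, "revisions": 0, "trace": []}
    for _ in range(max_steps):  # cap the loop so it cannot run forever
        action = fake_router_llm(state)
        state["trace"].append(action)
        if action == "finish":
            break
        ACTIONS[action](state)
    return state


result = run_state_machine("summarize report")
```

With a real model in place of `fake_router_llm`, the number of iterations is no longer known in advance, which is where the extra unpredictability comes from.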

The final level of autonomy is the level I call an agent, or really an “autonomous agent”. With state machines, there are still constraints on which actions can be taken and what flows are executed after that action is taken. With autonomous agents, those guardrails are removed. The system itself starts to decide which steps are available to take and what the instructions are: this can be done by updating the prompts, tools, or code used to power the system.

Choosing a cognitive architecture

When I talk about “choosing a cognitive architecture,” I mean choosing which of these architectures you want to adopt. None of these are strictly “better” than others - they all have their own purpose for different tasks.

When building LLM applications, you’ll probably want to experiment with different cognitive architectures just as frequently as you experiment with prompts. We’re building LangChain and LangGraph to enable that. Most of our development efforts over the past year have gone into building low-level, highly controllable orchestration frameworks (LCEL and LangGraph).

This is a bit of a departure from early LangChain, which focused on easy-to-use, off-the-shelf chains. These were great for getting started but tough to customize and experiment with. This was fine early on, as everyone was just trying to get started, but as the space matured, the design pretty quickly hit its limits.

I’m extremely proud of the changes we’ve made over the past year to make LangChain and LangGraph more flexible and customizable. If you’ve only ever used LangChain through the high level wrappers, check out the low-level bits. They are much more customizable, and will really let you control the cognitive architecture of your application.

If you’re building straightforward chains and retrieval flows, check out LangChain in Python and JavaScript. For more complex agentic workflows, try out LangGraph in Python and JavaScript.

Wikipedia Edit War Update

A few months ago I stumbled into an edit war on Wikipedia. I noticed that Wikipedia's page on Jacy Reese was being, essentially, guarded from having any mention that he previously went by his full name. There was a pattern where someone would notice this information was missing, add it, and then it would be reverted soon after.

The main user guarding the page was Bodole, and someone pointed me yesterday to where they've been banned from editing Jacy's page for three months. The discussion there was another interesting window into how Wikipedia handles disputes, so after reading it I thought it would be interesting to review:

User Drmies edited the page to remove a list of articles Jacy had published ("rm linkfarm. we list books, not articles", link). Drmies is an experienced editor, making a routine cleanup.

User Bodole reverts the change ("Many BLP list articles. Please discuss on talk page if you think this should be an exception.", link).

Drmies reverts the revert ("It's the other way around. what you are doing is promoting this person by linking a set of articles. if you have secondary sources that prove these articles are worth noticing, that's a different matter", link)

Bodole reverts the reversion of the revert ("You are edit warring. Please stop. Discuss on the talk page if you insist. See the WP:BRD cycle", link) and puts a warning (link) on Drmies' talk page.

Drmies responds there with "Aw boohoo" (link).

Drmies reverts the reversion of the reversion of the revert ("see talk page", link) and marks the page as being subject to Wikipedia:Conflict of Interest (link). It looks to me like Drmies thinks Bodole may either be Jacy or someone closely connected with him. Drmies removes biographical information from the page ("this 'Sentience Institute' has an article--why this biography is bloated with content about some poll, verified only with links to websites, is not clear", link)

Discussion moves to the talk page

Drmies is clearly quite unhappy with apparent promotional editing ("we are not here to produce link dumps for resume-style lists of publications", "The article itself is way too fluffy anyway; it used to be a lot worse, thanks in part to edits like this one by the creator, Utsill, and this one, by Reckston. A quick look at the references show a plethora of primary links and references to non-notable outfits", "The talk page, and the edit history, indicate that a number of editors have tried to bring some order to this madness, and I thank 78.26, Melcous, Kbog, and especially AlasdairEdits for their efforts").

Bodole files a complaint on Administrators' noticeboard/Incidents ("Disruptive editing by User:Drmies", link)

The complaint does not go well for Bodole. It's interesting reading, but generally the administrators think Drmies' behavior is reasonable and Bodole's is not. They bring up that Bodole tried to remove the discussion of whether the page should contain "Anthis" from the talk page, that Bodole may be a (not allowed) alias of Utsill, who created the page, and that "Boodole appears to be a [single-purpose account], perhaps one who is here to [right great wrongs]. Of their 228 edits, it appears that the vast majority of them concern Jacy Reese/Jacy Reese Anthis in some way". The consensus is to temporarily ban Bodole from editing the 'Jacy Reese Anthis' page.

Bodole responds by ragequitting ("I will now sign off of Wikipedia indefinitely").

Wikipedia volunteers aren't really in a position to investigate conflicts of interest, but it does make me wonder who Bodole is and, if they're not connected with Jacy, why they would be so invested in this one article.

A Chunk by Any Other Name: Structured Text Splitting and Metadata-enhanced RAG

Editor's note: this is a guest entry by Martin Zirulnik, who recently contributed the HTML Header Text Splitter to LangChain. For more of Martin's writing on generative AI, visit his blog.

VESPA: Static profiling for binary optimization

What the research is:

Recent research has demonstrated that binary optimization is important for achieving peak performance for various applications. For instance, the state-of-the-art BOLT binary optimizer developed at Meta, which is part of the LLVM Compiler Project, significantly improves the performance of highly optimized binaries produced using compilers’ most aggressive optimizations, such as profile-guided and link-time optimizations.

In this research, we propose a novel approach to apply binary optimization without the need to profile the application. Our technique, called Vintage ESP Amended (VESPA), builds on top of a previous technique called evidence-based static prediction (ESP), which applies machine learning techniques to statically infer the direction of branch instructions in a program.

VESPA expands on ESP in several ways to make it useful in the context of binary optimizers. VESPA increases the scope where binary optimizers can be used, thus enhancing the range of applications that can leverage these tools to improve their performance. Our work also enables higher performance and better user experience for many software applications that were out of the reach of binary optimizers, such as end-user mobile applications.

How it works:

VESPA is useful for obtaining profile information to feed binary optimizers like BOLT statically, i.e., with no need to execute the target application to produce profile data. To achieve this, VESPA employs machine learning techniques. First, during a training phase, VESPA is provided with a set of applications and corresponding dynamic profiles. Using these, VESPA trains a neural network model that learns the probability that branch instructions in the programs will be taken based on various program characteristics (e.g., the condition code of the branch or whether the target block is a loop header).

After this model is produced, it can be used to infer the probability that branches from other programs will be taken. VESPA then transforms these probabilities into code frequencies, or estimates of how often each individual piece of the program will execute, similar to the information that a binary optimizer normally requires from dynamic profiles obtained by executing an application. Once the static profile data produced by VESPA is injected into a binary optimizer, this tool can proceed with its optimization steps as usual, completely oblivious to how the profile data was computed. VESPA, therefore, can very easily be integrated into existing binary optimizers, which we demonstrated by integrating it into Meta’s BOLT binary optimizer.
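To give a feel for what turning branch probabilities into code frequencies can mean, here is a generic sketch. It is not the algorithm from the VESPA paper, which this post does not spell out. It assumes a tiny hand-written control-flow graph with made-up block names and probabilities, and propagates an entry frequency of 1.0 along the edges until the values settle.

```python
from typing import Dict, List, Tuple

# Hypothetical CFG: block name -> list of (successor, probability of taking that edge).
# The probabilities stand in for what a trained model might predict.
CFG = Dict[str, List[Tuple[str, float]]]

cfg: CFG = {
    "entry": [("loop_header", 1.0)],
    "loop_header": [("loop_body", 0.9), ("exit", 0.1)],
    "loop_body": [("loop_header", 1.0)],
    "exit": [],
}


def block_frequencies(cfg: CFG, entry: str, iterations: int = 1000) -> Dict[str, float]:
    """Estimate how often each block runs per execution of the entry block.

    Repeatedly applies: freq(block) = [block is entry] + sum over predecessors
    of freq(pred) * prob(pred -> block). This converges as long as every loop
    has a nonzero probability of exiting.
    """
    freq = {block: 0.0 for block in cfg}
    for _ in range(iterations):
        updated = {block: 0.0 for block in cfg}
        updated[entry] = 1.0  # the function is entered once
        for block, edges in cfg.items():
            for successor, probability in edges:
                updated[successor] += freq[block] * probability
        freq = updated
    return freq


frequencies = block_frequencies(cfg, "entry")
```

In this toy graph a loop that continues with probability 0.9 yields a header frequency of about 10 and a body frequency of about 9, which is the kind of per-block weight a layout optimization could consume.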

Compared to the seminal ESP technique that inspired our work, VESPA provides three main improvements:

- An enhanced neural-network model

- New program features to improve the model’s accuracy

- A technique to derive code frequencies required for binary optimizations instead of simply branch directions

Why it matters:

BOLT can provide performance speedups of about 20 percent not only for many of Meta’s widely deployed server workloads, but also for other widely used open source applications such as compilers (e.g., GCC and Clang) and database systems (e.g., MySQL and PostgreSQL). To achieve these results, BOLT relies on very accurate dynamic profile data collected from executing the target applications on representative inputs. Unfortunately, collecting these profile data adds complexity and overheads to applications’ build processes, and sometimes it is not even possible — for example, in the case of mobile applications executing on user devices.

Using VESPA to derive static profiles for the BOLT binary optimizer, our work demonstrates that a 6 percent speedup can be achieved on top of highly optimized binaries built with Clang -O3 without the need to dynamically profile the application. As such, our research demonstrates that binary optimizations can be beneficial even in scenarios where dynamic profiling is prohibitive or impossible, thus opening new opportunities for binary optimizers, such as end-user mobile applications.

Read the paper:

VESPA: Static profiling for binary optimization

The post VESPA: Static profiling for binary optimization appeared first on Engineering at Meta.

Things that used to be hard and are now easy

Hello! I was talking to some friends the other day about the types of conference talks we enjoyed.

One category we came up with was “you know this thing that used to be super hard? Turns out now it’s WAY EASIER and maybe you can do it now!”.

So I asked on Twitter about programming things that used to be hard and are now easy.

Here are some of the answers I got. Not all of them are equally “easy”, but I found reading the list really fun and it gave me some ideas for things to learn. Maybe it’ll give you some ideas too.

- SSL certificates, with Let’s Encrypt

- Concurrency, with async/await (in several languages)

- Centering in CSS, with flexbox/grid

- Building fast programs, with Go

- Image recognition, with transfer learning (someone pointed out that the joke in this XKCD doesn’t make sense anymore)

- Building cross-platform GUIs, with Electron

- VPNs, with Wireguard

- Running your own code inside the Linux kernel, with eBPF

- Cross-compilation (Go and Rust ship with cross-compilation support out of the box)

- Configuring cloud infrastructure, with Terraform

- Setting up a dev environment, with Docker

- Sharing memory safely with threads, with Rust

Things that involve hosted services:

- CI/CD, with GitHub Actions/CircleCI/GitLab etc

- Making useful websites by only writing frontend code, with a variety of “serverless” backend services

- Training neural networks, with Colab

- Deploying a website to a server, with Netlify/Heroku etc

- Running a database, with hosted services like RDS

- Realtime web applications, with Firebase

- Image recognition, with hosted ML services like Teachable Machine

Things that I haven’t done myself but that sound cool:

- Cryptography, with opinionated crypto primitives like libsodium

- Live updates to web pages pushed by the web server, with LiveView/Hotwire

- Embedded programming, with MicroPython

- Building videogames, with Roblox / Unity

- Writing code that runs on GPU in the browser (maybe with Unity?)

- Building IDE tooling with LSP (the language server protocol)

- Interactive theorem provers (not sure with what)

- NLP, with HuggingFace

- Parsing, with PEG or parser combinator libraries

- ESP microcontrollers

- Batch data processing, with Spark

Language specific things people mentioned:

- Rust, with non-lexical lifetimes

- IE support for CSS/JS

what else?

I’d love more examples of things that have become easier over the years.