Here are notes and links from my talk at Southern California Linux Expo. This is not exactly what I said but the links should be right.

Introduction: limits of individual protection

I need to start with a disclaimer. Privacy tools will not get you privacy, but it's important to do them anyway.

- Most privacy tools and settings make you different from others, so more fingerprintable (hi, Linux nerds).

- Out-of-band surveillance, which privacy tools can't touch, is still there (and the more you participate in the modern economy, the more you're surveilled).

- Companies fail to comply with existing laws (yes, they complain that they're "confusing," and somehow that seems to work in a lot of cases). And they play legal tricks to build up and use friendly jurisdictions.

- The more you try to protect yourself, the more crappy ads you get. How long can you stand getting the miracle cure ointment ad featuring the "before" picture until you give up and try to get tracked so you get more legit ads?

By itself, no set of privacy tools is going to be able to get you to an acceptable level of privacy. If that's what you came for, you can split, go get a coffee or something.

Privacy tools are worth doing, but only as part of a cooperative program to address a much larger set of problems.

Am I here to argue the privacy point of view or the pro-business point of view? Yes.

First of all, surveillance advertising competes directly with product innovation for investments.

The economics literature on the effects of shifting investment away from surveillance is going to be interesting. In the meantime, we have evidence from mobile games and mobile game advertising, which is a fast-moving behavioral economics lab that helps spot trends early. As mobile game developers lose much of their targeting info to Apple's App Tracking Transparency, they compensate by investing more in content and gameplay.

Surveillance also puts us at greater risk from internal strife and external adversaries.

Bob Hoffman told the European Parliament, "Tracking is also a national security threat. The Congress of the United States has asked U.S. intelligence agencies to study how information gleaned from online data collection may be used by hostile foreign governments."

(More on that: Microtargeting as Information Warfare by Prof. Jessica Dawson of the Army Cyber Institute)

All right, all right, fine, you came for open source privacy tools advice so I will include some. We'll do some fun tips, but I will try to put them in context. Here's number one.

Privacy tip: fix YouTube

This one is an easy example of a tracking risk. The video you came to watch is great, but the stuff that gets suggested after that is the first step down the rat hole. A case before the Supreme Court right now covers YouTube running ISIS recruiting videos. And one of their biggest stars was just arrested in Romania for human trafficking.

The recommendation algorithm on there will take you to some pretty dark places, pretty fast. But there are also videos on there you have to watch for school, or work.

What I want is a YouTube setup that will not just protect my privacy, but also reduce the number of YouTube impressions I generate. (Also I want to visit my family members at college or at a legit job, not at terrorist training camp.)

This requires two browser extensions. First, use the Multi-Account Containers extension to put YouTube in a separate browser container. That's a separate space with its own logins, storage, and cookies. Click the extension, select "Manage Containers" and make a new container for YouTube. Then go to YouTube and choose "always open this site in container."

Second, use Enhancer for YouTube to turn off the problematic features.

Don't use the native mobile app for YouTube.

There are also alternate front ends for YouTube and other services. LibRedirect is an extension to automatically redirect to those services. But they aren't as future-proof, because it's easier for YouTube to break them.

Privacy violations as part of a larger problem

Privacy is downstream from other problems.

Surveillance capitalism isn't a thing because advertisers care about your personal data.

If you do a "Right to Know" most of what you get is wrong. According to Oracle, I am a soccer mom of four.

I hate to break it to you, but those startups that tell you that you can make money selling your personal info to advertisers are wrong. Your data has value as a short-term intermediate reaction product, as an input to two kinds of deceptive practices.

- First of all, the B2C scams: ad targeting lets the legit advertisers show their ads to the affluent users who can afford stuff.

And they typically target younger people. Targeting generally cuts off at age 34 or 54.

So what do social media platforms do with all the ad slots that the legit advertisers don't buy, and that go to older and poorer people?

Right, they get the not so legit ads.

- Second is the B2B scam: tricking advertisers into sponsoring content that they would choose to avoid, such as Russian disinformation and copyright infringement. User tracking lets ad intermediaries sell an ad impression that claims to reach a high-value user, but on content that no reasonable advertiser would choose to support.

Hey, look, it's a real car ad on a scraper site.

How can such a large industry get away with this kind of conduct for so long? Professor Tressie McMillan Cottom wrote,

From buying a gallon of milk to making a dinner reservation, all the way up the chain to electoral malfeasance, so many of our interactions feel weighted away from social connection in favor of extracting every ounce of unfair advantage from every single human activity. Not to overstate it, but a pluralistic democracy simply cannot function when most of its citizens cannot trust that the arrangements that they rely on to meet their basic needs are roughly fair.

To put the privacy problem in context, privacy violations as we see them are downstream from changes in business norms. We're pulling on one tentacle of a much bigger threat than just the obvious personal privacy issues such as swatting, identity theft, price discrimination, and investment scams.

The relative payoffs of production and predation (or “making” versus “taking”) are determined by legal mechanisms for enforcing contracts and protecting property rights, but also by social norms and interpersonal trust. — Stephen Knack

The more common that scams become in our markets and society, the more trust we burn, the more unnecessary risk we take on, and the more wealth we miss out on. This is not an advertising problem, as Bob Hoffman said. It's a surveillance problem.

Some kinds of advertising can have economic signaling value that does help build trust, but only if the medium is set up for it. (Fixing digital ads to pull their weight and fulfill the signaling role of advertising is a hard problem, and I should bug Rory Sutherland some more about how to solve it.)

Privacy tip: mobile apps

Native apps can track you in ways that web sites on a well-behaved browser can't. In-app browsers inject JavaScript. Apps contain tracking SDKs. Privacy filters are limited.

Banning TikTok is a start, but all apps containing the TikTok SDK are TikTok, as far as harvesting your info goes. And other apps and/or SDKs could be sharing your info with other people you don't want it shared with. There are a few ways to deal with surveillance apps.

- Delete your account

- Switch to using the service on the web

- Limit your use of the app (For example, make a habit of checking Signal before you check surveillance apps, so people learn that it’s a better way to reach you.)

Remove all the surveillance apps from your phone that you can. This doesn't necessarily mean cut off people who you can only reach on a surveillance app. Social connections mean you live longer, so apps required to communicate with friends and family are worth spending some of your personal "privacy risk budget" on. In general I accept some risks on social connections and collaborating, but I try to be stricter when it comes to shopping and entertainment.

Good news: privacy is on the way up

Right now we're in the middle of some positive privacy trends: more effective enforcement of existing privacy laws and regulations, more interest in new ones, and some software improvements that make some kinds of tracking harder. Here in California, some recent news includes

- the Sephora case, which makes it clear that common surveillance marketing practices are sales under the CCPA

- the Attorney General's office has been clarifying rules on Global Privacy Control, and doing a sweep of law-breaking apps

- CPPA, the new privacy agency, is starting up

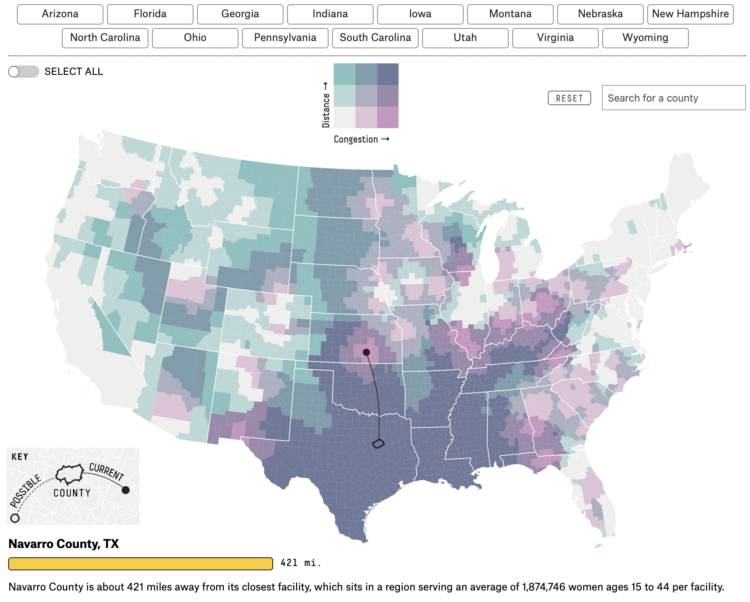

More states are probably going to get privacy laws this year, so those outside California will be able to join in.


We have some advantages if we sound like we know what we're doing.

Surveillance and the attitudes underlying it are not changing right away, but you can shift things if you approach each action with something easy for the other end to do, and act like you know what you're doing. They're going to balance the time required to act on your privacy mail against the risk of not acting on it, and if the risk looks big enough, it's easier for them to do the right thing.

Enough of those and we shift the relative expected payouts of surveillance and non-surveillance investments. (Like RCRA got many companies to just cut down on hazmat instead of dealing with the required record-keeping.)

Privacy tip: mobile phone settings

Check your phone settings. On Apple iOS there are two settings for surveillance ads: one for most companies' ads, and one for Apple's own ads. Don't forget to check both. (Yes, you probably have to scroll down for the second one. Well played, Apple.)

- In Settings, go to Privacy & Security, then Tracking, and make sure Allow Apps to Request to Track is turned off.

- Also in Settings under Privacy & Security, find Apple Advertising and make sure that Personalized Ads is turned off.

On Android, you can open Settings, go to Privacy, then Ads and select Delete advertising ID.

More info: How to Disable Ad ID Tracking on iOS and Android, and Why You Should Do It Now | Electronic Frontier Foundation

If you get these settings right, your mobile app ads will get really crappy, really fast, but you're limiting mobile time and maximizing web time, right?

General principle: trying to herd the money away from the worst places.

We said we would try to retrain the surveillance business. Address the worst practices first. Doing the easy stuff first can create the wrong incentives. That's why I put YouTube first here. Then level up your privacy skills and toolset.

Level 1: mix of effective and ineffective privacy practices and tools

Level 2: effective privacy practices and tools

Level 3: effective practices and tools applied in an effective way

Remember you are not going to be able to get individual protection that's meaningful while still participating in society.

It's more about driving transformation.

Privacy tip: Google Chrome

This is a big year for Google Chrome.

- Manifest v3: coming next year, will limit the ability of content blocking extensions to block dynamically. On the Google Chrome browser, ad blockers, along with tracking protection tools that work in a similar way to ad blockers, will soon be limited in what they can do. If your chosen privacy tools and settings are not going to be supported, you might have to switch browsers. Browser compatibility has gotten a lot better recently, so if you switched because a site you like was broken on your old browser, please check it again. (This may or may not affect other Chromium-based browsers.)

- Privacy Sandbox: a variety of projects, including a mix of some actual privacy features, one big on-browser ad auction (an ambitious project) and some anti-competitive shenanigans. It looks like 2023 will be the summer of double ad JavaScript on Google Chrome: you'll still have the old cookie-based stuff, but you'll also have an experimental ad auction running inside the browser.

Because Google both competes as an adtech intermediary and releases a browser, antitrust concerns mean they have to try to make the browser fair to all the other adtech intermediary companies they compete with. This is now the subject of an ongoing investigation by the Competition and Markets Authority in the UK. James Rosewell, CEO and founder of a mobile data company called 51Degrees, started a long, complicated process.

If you do decide to keep Google Chrome, there is a bunch of brouhaha about the impending end of third-party cookies, but you can turn them off today without breaking much, if anything. (Sites already have to support browsers that don't do third-party cookies.) From the Ad Contrarian newsletter:

- Open the Chrome browser. Click the three dot thing in the upper right corner.

- Click "Settings"

- In the left column click "Privacy and security"

- Click "Cookies and other site data"

- Click "Block third-party cookies"

Google Chrome also has new in-browser advertising features, confusingly lumped together as Privacy Sandbox. Check chrome://settings/privacySandbox.

The text in this settings screen is not especially helpful. Google's "Topics API" is a general-purpose system to categorize users based on the sites they visit. There's nothing about it that's limited to ads. It will probably be more useful for price discrimination, and worse, helps incentivize deceptive practices to drive web traffic. (Looks like that message is in open-source Chromium, so I filed an issue.) Anyway, if you have Google Chrome, turn this off.

Update 17 Jul 2023: If you have a web site, or if you administer desktop systems with Google Chrome on them, you can do a few more steps to protect others: Google Chrome ad features checklist

The attribution tracking chain

This is an oversimplified chart, but it's a good start for learning about the "attribution tracking" chain and how to break it.

If you break the link between surveillance data on what you buy and surveillance data on what ads you saw, then it's harder to justify investments in surveillance advertising. Remember, we're trying to move money from surveillance to other investments here.

That's the main reason why you have YouTube on a browser container that is never used for anything else. It interferes with the attribution link between a video view and a sale.
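
To make the "break the link" idea concrete, here is a toy sketch of attribution as a join on a shared identifier. Everything in it is made up for illustration: the `AdView` and `Purchase` records, the `attribute` function, and the identifier strings are assumptions, not how any real ad platform stores data. Real attribution can also use email addresses, IP addresses, and other out-of-band data, so a container only breaks the cookie-style link modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdView:
    browser_id: str  # hypothetical identifier seen by the ad platform
    ad: str


@dataclass(frozen=True)
class Purchase:
    browser_id: str  # hypothetical identifier seen by the store's tracker
    item: str


def attribute(ad_views, purchases):
    """Return (ad, item) pairs where the same identifier shows up on both sides."""
    ads_by_id = {}
    for view in ad_views:
        ads_by_id.setdefault(view.browser_id, []).append(view.ad)
    matches = []
    for purchase in purchases:
        for ad in ads_by_id.get(purchase.browser_id, []):
            matches.append((ad, purchase.item))
    return matches


# Same identifier everywhere: the video ad gets credit for the sale.
shared = attribute([AdView("id-123", "boots ad")], [Purchase("id-123", "boots")])

# YouTube in its own container: the two sides see different identifiers,
# so the join finds nothing to credit.
contained = attribute([AdView("yt-container-9", "boots ad")], [Purchase("id-123", "boots")])

print(shared)
print(contained)
```

With nothing to join on, the ad view never gets credit for the sale, which is the kind of missing data that makes surveillance ad spending harder to justify.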

Privacy tip: remember to vote

California has the CPRA because people voted for Proposition 24 in 2020. The CPRA isn't perfect, but voting made a difference. While you're voting, please don't eliminate a candidate from consideration just because they're using the big surveillance platforms. They're hard to avoid completely.

In today's environment it's generally better to make a little progress than to achieve privacy purity but lose the actual election.

Breaking the chain: where?

Once you have a mental model of the attribution chain you can allocate your time most effectively.

- Software tools are usually lower effort but shorter range: they can protect an activity like a web session but can't reach out to affect server to server communications.

- Legal tools can reach out further, but are higher effort. And companies don't always comply.

Different surveillance threats can be addressed at different levels. In general, earlier and more automated is easier, but legal tools have a longer reach, since they can touch systems that you don't have a network connection to, and that automated tools can't see.

Here's a list of places to make a difference, from earliest to latest.

- Don’t do a trackable activity (example: delete a surveillance app, don’t visit a surveilled location)

- Don’t send tracking info (example: block tracking scripts)

- Send tracking info that is hard to link to your real info (examples: use an auto-generated email address system like Firefox Relay, or churn tracking cookies with Cookie AutoDelete)

- Deny consent or send an opt out when doing a tracked activity: Global Privacy Control

- Object, exercise your right to delete, or opt out later, after data has been collected but before you are targeted (CCPA Authorized Agents, RtD automation tools like Mine)

Privacy tip: CCPA script

Delete your info from the largest surveillance firms. Here's a partial list. Later on we'll cover how to make a personalized list based on who has info on you.

I do these pretty quickly with Mutt and shell.

ccpa privacy@example.com

The script is here: CCPA opt out, nerd edition

The sample letters, as templates, are in this privacy-docs Git repository.
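
If you don't use Mutt, the same idea (fill in a letter template, address it to the company's privacy contact) can be sketched in a few lines of Python. This is not the script linked above. The letter wording, the `build_request` function, and the names and addresses are all placeholders invented for illustration, so swap in a real template from the repository before sending anything.

```python
from email.message import EmailMessage
from string import Template

# Placeholder wording only; the real sample letters are in the privacy-docs repository.
LETTER = Template(
    "To whom it may concern at $company:\n\n"
    "I am a California resident. Under the CCPA, please delete my personal "
    "information and do not sell or share it.\n\n"
    "$name\n"
)


def build_request(to_addr, from_addr, name, company):
    """Build (but do not send) an email message from the letter template."""
    msg = EmailMessage()
    msg["To"] = to_addr
    msg["From"] = from_addr
    msg["Subject"] = "CCPA request to delete and opt out"
    msg.set_content(LETTER.substitute(company=company, name=name))
    return msg


# Hypothetical sender and company, matching the example address used above.
msg = build_request("privacy@example.com", "me@example.org", "A. Resident", "Example Corp")
print(msg)
```

The sketch only builds the message. Sending it is left to whatever mail setup you already trust, which also keeps the thread in your normal inbox for follow-ups.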

Teaching the escalation path

If you're protected from a company by automated tooling, you can mostly relax. (This applies to most of the companies that can only track you by an identifier stored in a third-party cookie. Turn off third-party cookies and you're fine.)

If they accept a GPC, almost as good. Remember that GPC applies to all uses of your information, not just the current session.

Manual opt outs are where things start to get time-consuming. Most opt outs are either not compliant, or take advantage of loopholes in the regs to make you do extra work.

If they give you any grief on an opt out, and make opt-out as hard as a "right to know" (RtK), then go ahead and escalate to an RtK. According to one study, a manual RtK can cost a company $1400 to handle.

Then if they give you grief on your RtK, you can file a complaint with the AG.

Be patient. Right now there are way more companies that have your personal info than companies that have a qualified CCPA manager. Remember, any individual request might end quickly or turn into a long thread. The object is to change the expected payoffs for investments in surveillance relative to other investments.
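
The escalation path above is really a short decision ladder, so here it is as one, in case that helps when you are working through a long list of companies. The `Step` names and the `next_step` function are my own labels for the stages described in this section, not legal terms or anyone's official process.

```python
from enum import Enum


class Step(Enum):
    RELAX = "covered by automated tooling"
    SEND_GPC = "rely on Global Privacy Control"
    MANUAL_OPT_OUT = "send a manual opt out"
    RIGHT_TO_KNOW = "escalate to a Right to Know request"
    AG_COMPLAINT = "file a complaint with the Attorney General"


def next_step(blocked_by_tools, honors_gpc, opt_out_grief=False, rtk_grief=False):
    """Pick the lowest-effort step that still applies to one company."""
    if blocked_by_tools:
        return Step.RELAX
    if honors_gpc:
        return Step.SEND_GPC
    if not opt_out_grief:
        return Step.MANUAL_OPT_OUT
    if not rtk_grief:
        return Step.RIGHT_TO_KNOW
    return Step.AG_COMPLAINT


# A company that ignores GPC and made the manual opt out painful.
print(next_step(blocked_by_tools=False, honors_gpc=False, opt_out_grief=True).value)
```

The ordering is the point: each rung costs you more time than the one before, so you only climb when the cheaper option has failed.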

Privacy tip: keep your Facebook account

Why does it matter if a company is sending my info to Facebook if I never log in to Facebook (or related sites)?

Just as the lowest point on a toxic waste site is generally a good place for a sampling well to check the progress of remediation, Facebook is a good place to sample for your personal info. It's a low point to which most of the firms who have your info will eventually share it.

Remember, get a Facebook account, but don't install the mobile app.

- Click on your face

- Select Settings and Privacy from the menu, then Settings

- Select "Ads" on the left column

- Under "Ad Preferences" select Ad Settings

(We're getting close...)

- Select "Audience-based advertising"

- Now scroll down and select "see all businesses"

You're probably going to get a lot of these. Let's have a look at a few.

First is a store where I actually bought something. Facebook's biggest advertiser at one point.

Then a DTC retailer that I've never heard of. Who sold them a list of "hot prospects?" I remember when this list used to be all car dealers.

Then two surveillance marketing companies. They can get a Right to Delete.

Finally another retailer, wait a minute, I haven't bought from this one either. The closest I have come to this one is the year that they had the LinuxWorld conference and the Talbots managers meeting at the same convention center in Boston. Remember, surveillance marketing and scam culture are two overlapping scenes: you can often see when a legit company has been sold a customer list that isn't. (Maybe the scammer who got the car dealers has moved on to retailers?)

Of course, while you're there, building a list of who to send opt outs to, don't buy anything from the ads.

Privacy tip: Browser checkup

Remove extensions you aren't sure about. A lot of spyware and adware gets through.

Run the EFF checkup tool, "Cover Your Tracks". Sad but true: the more custom your Linux setup is, the more fingerprintable you are. Technical protections alone won't cover you; browser protection needs to combine the technical and the legal.

Turn on Global Privacy Control. This will automate your California do not sell for sites you visit. Still not supported everywhere, but will have more effect as more companies come into compliance and more jurisdictions require companies to support GPC.
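
On the wire, GPC is just a request header, `Sec-GPC: 1`, that the browser adds to each request. If you run a site and wonder what honoring it looks like at the simplest level, here is a minimal sketch. The `gpc_opt_out` function name and the plain-dict headers are assumptions for illustration; what a site is actually required to do with the signal is a legal question, not something this code answers.

```python
def gpc_opt_out(headers):
    """Return True if the request headers carry a Global Privacy Control signal."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive, so compare in lower case.
        if name.lower() == "sec-gpc":
            return value.strip() == "1"
    return False


# Hypothetical request headers as a plain dict.
print(gpc_opt_out({"User-Agent": "ExampleBrowser", "Sec-GPC": "1"}))
print(gpc_opt_out({"User-Agent": "ExampleBrowser"}))
```

The check itself is tiny; the work for a company is in routing that answer to every system that would otherwise sell or share the visitor's info.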

Content blocking. This next one is a tricky subject, so I'm not going to say anything about it. I'll just quote the FBI's public service announcement, Cyber Criminals Impersonating Brands Using Search Engine Advertisement Services to Defraud Users.

It all comes back to scam culture. Be warned when looking for an ad blocker on browser extension directories. A lot of them are spyware or malware.

Some privacy extensions I use include:

- ClearURLs to remove tracking parameters from URLs, and often speed up browsing by skipping a redirect that's just there for tracking.

- Cookie AutoDelete. Cleans up cookies after leaving a site. Not for everyone: it does create a little extra work for you by making you log in more often and/or manage the list of sites that can set persistent cookies. But it does let you click agree with less worry since the cookie you agreed to is going to be deleted.

- Facebook Container because, well, Facebook.

- NJS. This minimal JavaScript disable/enable button can be a good way to remove some intrusive data collection and growth hacking on sites where the real content works without JavaScript.

- Personal Blocklist is surprisingly handy for removing domains that are heavy on annoyances and surveillance, but weak on actual information, from search results.

- Privacy Badger blocks tracking scripts and will also turn on Global Privacy Control for you, by default. This will not have much of an impact right away but will start to do more and more as more companies come into compliance. (More companies are required to comply with California privacy law than there are people who understand how to comply with California privacy law.)
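
For a feel of what "remove tracking parameters from URLs" means in the ClearURLs item above, here is a rough sketch. It is not how ClearURLs is implemented: the extension ships its own, much larger rule set, and the short parameter list and the `strip_tracking` function below are assumptions chosen for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative, incomplete list; a real tool maintains far more rules.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"fbclid", "gclid"}


def strip_tracking(url):
    """Return the URL with known tracking query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith(TRACKING_PREFIXES) and key not in TRACKING_NAMES
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


print(strip_tracking("https://example.com/article?id=42&utm_source=news&fbclid=abc"))
# prints https://example.com/article?id=42
```

Parameters the page actually needs (here `id`) are kept, so the link still works after the tracking ones are gone.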

Privacy tip: Authorized Agent and related services

These are relatively new, still getting more and more effective as more companies come into compliance. If you have tried an authorized agent service in the past and gotten nowhere, try again. Because of the Sephora case last year and the enforcement sweep this year, companies are staffing up. And hey, if you have made it this far, you could probably qualify for one of those privacy manager jobs.

Hope to see you at SCALE next year.

Conference program listing

Most of us have built up a collection of privacy tools, including browser extensions, settings, and services. But privacy threats keep changing, while at the same time new kinds of tools and services have become available. This talk will help maximize the value of your privacy toolset for today's best options, while helping you plan for the future.

Not only can you protect yourself as an individual, but your choices can help drive future investments away from surveillance into more productive areas. (Surveillance marketers and their investors think they can train us -- but with the right tools we can train them right back.)

Today most of us are at level 1 or 2 on privacy.

Level 1 You do something about privacy and take a mix of effective and ineffective actions

Level 2 You take mostly effective actions, but don't allocate your time and resources for maximum effect

Level 3 You take effective actions, efficiently selected and prioritized

Ready to level up? Now that California law codifies our right to check out how our personal information is shared, that means we have an opportunity to optimize our privacy toolkits and habits, and focus where it counts. We'll cover:

- Ad blocker myths and facts, and why the surveillance marketing business loves some ad blockers

- The most important privacy extensions for most people (and they're not what you'd think)

- Corporate surveillance about you that never touches your device, and how to reach out and block it

- Don't use the law on a problem that a tool can solve faster, but don't try to stretch a tool to solve a problem that needs the power of the law

- Where to add extra protection for special cases

Each of your individual privacy choices has a bigger impact than just the protection that it provides for you. In the long run, your real impact will be not so much in how you’re protected as an individual, but in how you help drive future investments away from surveillance and toward more constructive projects.

Recent related links

How Can Advertisers Stop Funding Piracy? Block Sellers, Not Domains

This Is What Happened When I Tried Using AdChoices (Spoiler: It Didn’t Work)

Notes on Privacy Extremism

The Cookie Mullet

WTF is the global privacy control?

Big changes coming for GDPR enforcement on Big Tech in Europe?

Websites Selling Abortion Pills Are Sharing Sensitive Data With Google

The Triumph Of Safari, by Magic Lasso

Google to SCOTUS: Liability for promoting terrorist videos will ruin the Internet

Schools sue social networks, claim they “exploit neurophysiology” of kids’ brains
