Shared posts

An April Offer on Inklings & Arthur

Obama's Lost Opportunity

There was much anticipation when Barack Obama was elected president in 2008. Much of the anticipation was because he was the first black president, but my anticipation was that he was the first politician in many years who could speak to the shared national concerns of both liberals and conservatives. Unfortunately, once he became president, that shared concern language quickly disappeared. I remember listening to his early presidential speeches hoping to hear that shared language, and being disappointed. In fact, I started to think that I had been overly optimistic of Obama's intellectual abilities. Then, recently, I read this article that saw Obama's oratorical failure in the same way, and I felt justified in my initial analysis:

But it was in public, when using his "coalition-building political voice," where he made his fatal mistake. Successful presidents have done precisely the opposite, using their "prophetic voice" to whip up popular energy and enthusiasm for their agenda and their "coalition-building political voice" to sell their colleagues on that agenda as something that is in the electoral interest of those same politicians. In short, instead of laying himself bare before the people and then managing their representatives carefully in private, Obama sought to manage the people carefully and then lay himself bare before their representatives, hoping to persuade the few where he knew he could not persuade the many.

Why had Obama stopped using his shared voice? Had he deemed it unnecessary since he was now elected? Did he fell it was ineffective? Had he gotten tired? The article suggests he never believed the shared language. We might never know, but it is terrible to see a lost opportunity, and one which seems even more elusive than it did when Obama was president.

View or Post CommentsMentally Ill U-Turn

Decades ago, the hospitalization of mentally ill people was commonplace. Inhumane treatment of the mentally ill in hospitals and an increasing emphasis on individual liberties eventually closed mental hospitals and greatly restricted the ability for governments to forcibly require mentally ill people to get treatment. After decades of an emphasis on the liberties of mentally ill people, a new focus on the cost of mentally ill people interacting with society has forced New York City to enact new policies to allow government departments to force mentally ill people to be treated. This could expand to other localities that are also struggling with mentally ill citizens. This 2005 video explains how many mentally ill people end up being treated in prison.

View or Post CommentsPavlo Golub: How to use PostgreSQL for (military) geoanalytics tasks by Taras Klioba

During the PGConf.EU 2023, my friend, colleague, and PostgreSQL Ukraine co-founder Taras Klioba delved into the fascinating intersection of geospatial analysis and modern warfare.

He decided not to publish a video record of his talk for obvious reasons. But now, he has shared the essential details of his talk in the form of a blog article. I want to boost this blog post for all Planet Postgres readers; that’s the primary purpose of the text you’re reading.

Taras’s article discusses the importance of geoanalytics in military operations due to the prevalence of geospatial data, focusing on utilizing PostgreSQL to process such data. It addresses everyday geoanalytical tasks such as finding K-nearest objects, distance calculations, and point within a polygon determination. It aims to provide practical examples and tips. Open-source data, specifically russian military facility data from OpenStreetMap, is used as a primary dataset. It is then imported into PostgreSQL using the osm2pgsql tool for analysis and optimization.

Additionally, fire data from NASA satellites is introduced as a second data source, sourced from the Fire Information for Resource Management System (FIRMS). FIRMS allows real-time monitoring of active fires worldwide, including within russian military facilities’ territory since 2022. The process involves downloading fire data using a specific script and importing it into a PostgreSQL database for further analysis.

For example, the text outlines a task of finding the K-nearest neighbors, precisely ten fires near the Shahed production plant in russia, known for manufacturing Iranian Shahed drones. It refers to previous research by the Molfar team for detailed information about the factory, located in the special economic zone Alabuga in Tatarstan. The text highlights the shift in factory priorities towards drone production following sanctions. It proposes a method involving creating a buffer around the target area and recursively expanding it until the desired results are obtained.

Please, read and share! Glory to Ukraine! 💙💛

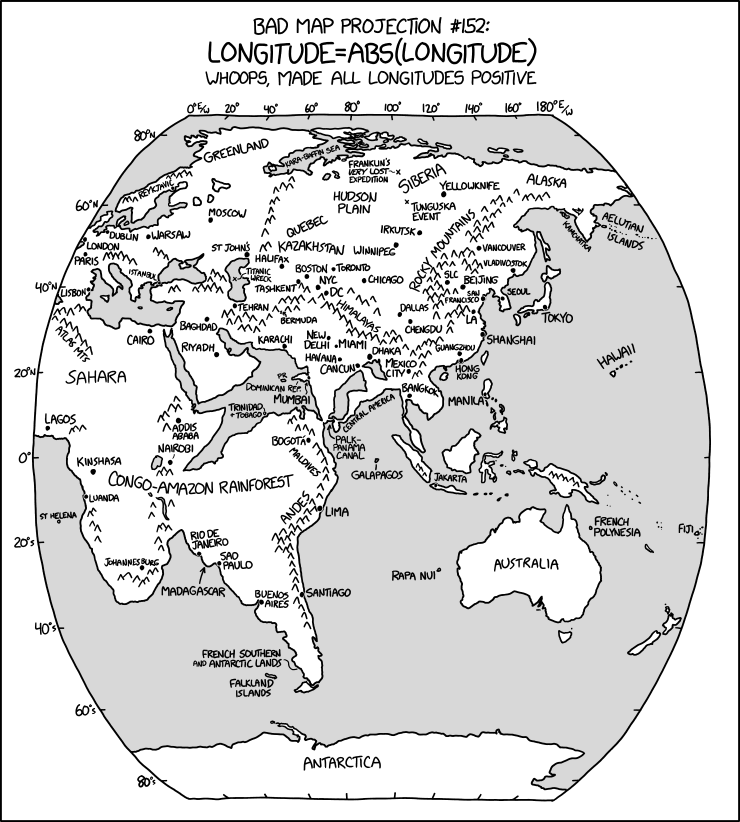

Paul Ramsey: XKCD Bad Map Projection with PostGIS

Last week, Randall Munroe dropped his latest XKCD "Bad Map Projection", number six, "ABS(Longitude)", which looks like this:

Truly this is a bad map projection, on a par with the previous five:

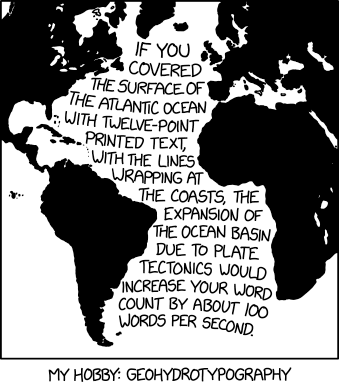

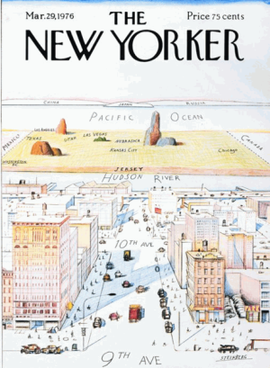

The last two are just applications of common map projections with very uncommon projection parameters that accentuate certain areas of the globe, a cartographic version of the classic "View of the World from 9th Avenue".

A colleague asked me if we could recreate ABS(Longitude) and I figured it was worth a try!

Getting Data

At a minimum, we want a countries layer and some independent place labels to provide context, which is available at the first stop for basic global data, Natural Earth.

We have been playing with ogr2ogr and weird remote access tricks lately, and we can use ogr2ogr to load the data in one step.

# Load the countries and places directly from the remote

# zip file into the working PostgreSQL database

ogr2ogr \

-f PostgreSQL \

-nlt PROMOTE_TO_MULTI \

-lco OVERWRITE=yes \

-lco GEOMETRY_NAME=geom \

postgresql://postgres@localhost/xkcd \

/vsizip//vsicurl/https://www.naturalearthdata.com/http//www.naturalearthdata.com/download/110m/cultural/ne_110m_admin_0_countries.zip

ogr2ogr \

-f PostgreSQL \

-lco OVERWRITE=yes \

-lco GEOMETRY_NAME=geom \

postgresql://postgres@localhost/xkcd \

/vsizip//vsicurl/https://www.naturalearthdata.com/http//www.naturalearthdata.com/download/110m/cultural/ne_110m_populated_places.zip

Preparing the Data

Now we have the data in the database, read to go!

The process we are going to apply will be transforming the shapes of one polygon at a time, and the Natural Earth data models the countries with one MultiPolygon per country.

Canada, for example, is one country, but 30 polygons.

We want a table with just one row for each polygon, so we "dump" all the multi-polygons using ST_Dump().

CREATE SEQUENCE country_id;

CREATE TABLE countries AS

SELECT nextval('country_id') AS id,

name,

(ST_Dump(geom)).geom::geometry(Polygon, 4326) AS geom

FROM ne_110m_admin_0_countries;

Next, because we are going to be processing western shapes with negative longitude different from eastern shapes we have to solve the problem: what to do with shapes that straddle the prime meridian?

The answer: ST_Split()!

First we create a prime meridian geometry to use as a "splitting blade".

CREATE TABLE lon_0 AS

SELECT ST_SetSrid(

ST_MakeLine(

ST_MakePoint(0,90),

ST_MakePoint(0,-90)),

4326)::geometry(LineString, 4326) AS geom;

Then we apply that blade to all the shapes that fall under it.

CREATE TABLE split_at_0 AS

SELECT id, name, ST_CollectionHomogenize(

ST_Split(c.geom, lon_0.geom))::geometry(MultiPolygon, 4326) AS geom

FROM countries c

JOIN lon_0

ON ST_Intersects(c.geom, lon_0.geom);

Surprisingly few countries end up chopped by the meridian.

The output of the split is, for each input polygon, a multi-polygon of the components. But we want to operate on the shapes one polygon at a time, so again, we must dump the multi-polygon into its components.

A slightly longer query dumps the split shapes, and stores them in a table with the rest of the un-split polygons, labeling each shape depending on whether it is "west" or "east" of the prime meridian.

CREATE TABLE countries_split AS

WITH split AS (

SELECT id, name, (ST_Dump(geom)).geom::geometry(Polygon, 4326) AS geom

FROM split_at_0

)

SELECT c.id, c.name, c.geom,

CASE WHEN ST_X(ST_StartPoint(c.geom)) >= 0 THEN 'east' ELSE 'west' END AS side

FROM countries c

LEFT JOIN split s

USING (id)

WHERE s.id IS NULL

UNION ALL

SELECT s.id, s.name, s.geom,

CASE WHEN ST_X(ST_StartPoint(s.geom)) >= 0 THEN 'east' ELSE 'west' END AS side

FROM split s;

We have divided the west from the east, and are ready for the final step.

Flipping the West

Now we are ready to apply a transformation to all the "west" countries, to turn their negative longitudes into positive ones.

To do this, we will use the powerful ST_Affine() function.

The two-dimensional form of the function looks like this:

ST_Affine(geom, a, b, d, e, xoff, yoff)

Where the parameters correspond to an affine transformation matrix:

a b xoff

d e yoff

0 0 1

Or, in equation form:

x' = a*x + b*y + xoff

y' = d*x + e*y + yoff

z' = z

From the equation it is pretty clear, we want to negate the input x and leave everything else alone.

- a = -1

- b = 0

- d = 0

- e = 1

In order to get a pretty map, we'd like the output data to be centered on the prime meridian again, so:

- xoff = -90

- yoff = 0

And in SQL like this:

CREATE TABLE countries_affine AS

SELECT id, name,

CASE WHEN SIDE = 'west'

THEN ST_Affine(geom, -1, 0, 0, 1, -90, 0)

ELSE ST_Affine(geom, 1, 0, 0, 1, -90, 0)

END AS geom

FROM countries_split;

CREATE TABLE places_affine AS

SELECT ogc_fid AS id, name,

CASE WHEN ST_X(geom) < 0

THEN ST_Affine(geom, -1, 0, 0, 1, -90, 0)

ELSE ST_Affine(geom, 1, 0, 0, 1, -90, 0)

END AS geom

FROM ne_110m_populated_places

ORDER BY pop_max DESC;

And the final result on the map looks like the XKCD map, without the pretty hand-labeling and mountains:

The bad map projections aren't the only cartographic cartoons XKCD explored. If you liked this one, take a look at:

- Map Projections, all real!

- World According to Americans

- Upside Down

- Map Age Guide, fascinating history!

INTENSION, EXTENSION AND R-TABLES (t&n)

Note: "Then & Now" (t&n) is a new version of what used to be the "Oldies but Goodies" (OBG) series. To demonstrate the superiority of a sound theoretical foundation relative to the industry's fad-driven "cookbook" practices, as well as the disregarded evolution/progress of RDM, I am re-visiting my old debunkings, bringing them up to the current state of knowledge. This will enable you to judge how well arguments have held up and realize the increasing gap between industry stagnation -- and scientific progress.

THEN: THE IMPORTANCE OF RELATIONAL TERMINOLOGY (t&n)

(email exchange with a reader originally published September 2002)

“Saw your latest and once again I think you have hit one of the many protruding nails on the head. Understanding one's data is so central and so crucial and yet so often ignored.

All this talk (not from you, I note) of silver bullets. Nothing new and I wonder if the paying customers and the big-ticket so-called technology strategy companies will ever wise up. Edward de Bono wrote of 'porridge words' that distract thought from the matter at hand. When used sparingly, they can facilitate new lines of thought but when, as they are in this field, they are used so casually and often they blur the real issues. All this technicalese of XML etcetera has this effect on me.

During one of the few times an employer allowed me to help people with logical design, I was having difficulty because the customer's IT staff knew very little English and had perhaps even less database background. I hit on the idea of explaining tables as relations and relations as sentences - sentences that must have the same 'size and shape'. Their faces seemed to light up and when they agreed that they had overloaded some of their tables, I was very pleased with myself. I felt vindicated a few weeks later when I read an article about predicates and propositions that Hugh Darwen had written in the now defunct DBPD magazine, put these thoughts much more precisely than I could, . Of course, the changes created new problems because the database product, like so many others, gave precious few ways to map the logical design to the physical one. But I regarded these as preferable problems since the staff was much more interested in the more concrete physical optimization techniques.

Without any disrespect to Dr. Codd (who I once met but was too awe-struck to ask any questions of), I have often thought that the language used by everybody in the field, with words such as "tables", nearly always brings connotations of physical arrangements to the mind of anybody who has done traditional programming. This seems unfortunate to me. Especially after I read Mr. McGoveran's proposals for results that might embody more than one table. (I wonder if these might not be part of the key for much better physical integration of databases with their visualization for users, not to mention smarter engines.)

I came across a site https://www.mcjones.org/System_R/ the other day, where a bunch of the System R people reminisced about its development on the occasion of, I think, the 25th anniversary of one of Codd's early papers. Presumably Mr. Date was absent from this gathering so that he could write his own most interesting history, which I remember reading five or six years ago. Anyway, I was struck again by how often their design decisions were either determined or distorted by physical considerations. And now, when many of the obstacles have been overcome courtesy of Moore's and other laws, some of those clever people seem regretful.

Also, please let me submit an historical, non-technical 'nit' to Mr. Date - I remember him writing that Codd did not coin the database term 'normalization of relations' as a result of R.M. Nixon's foreign policy excursion with China. But I also remember reading what I recall was an original interview with Dr. Codd in the DBMS magazine where he stated that this was the case. It's not really important, perhaps I'm just sensitive to it because I live in a country that established relations with modern China a year earlier!”

HypoPG 1.4.0 is out!

Taipei, Taiwan - May 27th, 2023

HypoPG 1.4.0

I'm pleased to announce the release of the version 1.4.0 of HypoPG, an extension adding support for Hypothetical Indexes, compatible with PostgreSQL 9.2 and above.

New features::

- Support hypothetically hiding existing indexes, hypothetical or not (github user nutvii and Julien Rouhaud)

Misc:

- Have hypopg_relation_size() error out rather than returning 0 if called for an oid that isn't a hypothetical index oid

- Slighthly reduce memory usage for hypothetical btree indexes without INCLUDE keys

Links & Credits

Thank to the users who reported bugs, they are all cited in the CHANGELOG file.

HypoPG is an open project. Any contribution to build a better tool is welcome. You just have to send your ideas, features requests or patches using the github repository at https://github.com/HypoPG/hypopg.

Links :

- Download: https://github.com/HypoPG/hypopg/releases

- Bug tracker: https://github.com/HypoPG/hypopg/issues

Unsavory Facts about King Charles to Remind the U.S. Why We Rejected Monarchy

Esse Olhos Irlandeses Não Piscam (uma matéria sobre a questão do aborto)

Esse Olhos Irlandeses Não Piscam

Phelim McAleer & Ann McElhinney: Jornalistas Merecedores do Nome

http://salvomag.com/new/articles/salvo42/these-irish-eyes-dont-blink.php

Phelim tinha visto muito nos seus vinte e cinco anos de jornalismo (ele começou sua carreira numa parte da Irlanda do Norte conhecida como “Território Bandido”), mas a evidência que ele viu na Sala 304 do Centro de Justiça da Filadélfia ultrapassou tudo o que ele havia encontrado anteriormente. As fotos exibidas numa telona – fotos de bebês bem formados, alguns cujo o pescoço tinha sido cortado com uma tesoura depois de nascerem vivos – eram mais horríveis que qualquer outra coisa que ele já tinha visto. Tudo isso já chocava por si só, mas foi ainda mais espantoso pra ele, como jornalista, ver que a galeria de imprensa atrás dele estava completamente vazia. Não havia nenhum jornalista de nível nacional cobrindo este caso. Nem um. Como assim?

Ele voltou pra casa em Los Angeles e contou para sua parceira e também esposa, a jornalista Ann McElhinney, que tinha encontrado o próximo projeto em que eles trabalhariam. Esse assunto era território desconhecido pra eles, totalmente fora da sua zona de conforto. Além do que, tanto ela quanto Phelim sempre se consideraram neutros com respeito a questão do aborto. Por que então entrar no ninho de vespas?

De qualquer forma, Phelim pediu as transcrições do tribunal e Ann os leu. Mais tarde, ela concordou que sim, eles iriam fazer um filme. Foi mais que uma concordância ou uma aptidão compartilhada. Foi uma convicção. Tinham uma informação importante de interesse público, e era uma vergonha ninguém estar publicando a respeito. Um filme sobre isso teria que ser feito; assim sendo, eles fariam.

Enquanto eles moravam na Romênia, no começo dos anos 2000, um alvoroço surgiu sobre uma mina de ouro na Transilvânia chamado de Projeto Rosia Montaña. Eles viram os ambientalistas ocidentais e grupos ativistas como o Greenpeace entrar com suas agendas, falando no lugar dos moradores como se esses não pudessem falar por si mesmos. Pior ainda, a mídia não estava reportando a verdade do assunto – que a vasta maioria dos locais queriam muito a mina.

A situação da Rosia Montaña provocou um tipo de conversão momentânea de 2 níveis. Eles viram que (1) o capitalismo era o sistema econômico que melhor se adequava pra tirar as pessoas da pobreza, e (2) que o jornalismo convencional não só estava fazendo um trabalho de péssima qualidade ao reportar a verdade mas era, certamente, corrupto, na medida que a narrativa desses ambientalistas externos estava sendo relatada, em vez da atual realidade do que acontecia. Então, em 2006, eles lançaram Mine Your Own Business, que contou a verdade sobre a vila mineira e também inspecionou outros projetos de mineração do mundo em desenvolvimento, que estavam sob ameaça da oposição por poderosos interesses externos.

Continuando com o tema do Grande Ambientalismo e o efeito que pode ter nas comunidades empobrecidas, em 2009 eles produziram Not Evil Just Wrong, que pesquisou e criticou a histeria sobre o aquecimento global. Então, em 2013, veio o FrackNation, na qual Phelim encarou ameaças, policiais e ações judiciais falsas ao contar as histórias dos americanos em zonas rurais cujos meio de subsistência estavam em risco por causa da fracturação hidráulica (NT. um método de extração de gases de rochas rasas).

Logo, enquanto o julgamento de um médico abortista parecia ser a mudança de direção num nível mais básico, foi, na verdade, a continuação dos trabalhos jornalísticos de reportar os fatos, histórias que os principais jornais estavam reportando erroneamante, ou, no caso de Gosnell, ignorando completamente.

Eles entraram num verdadeiro pesadelo acordado. Um gato dominava o pedaço e o cheiro de fezes e urina de gato junto com fenaldeído dominavam no ar. Havia sangue no chão, urina nas escadas e pilhas de lixo em todo o lugar. As cadeiras, cobertores, e toda a superfície estava coberta de pelo de gato, e o equipamento médico era anti-higiênico, antigo, enferrujado e jogado ao acaso, num variado estado de destruição.

Quanto mais ele olhavam ao redor, pior ficava. Um armário de metal guardava jarros com pés que foram cortados de bebês. Geladeiras e freezers estavam espalhados sobre o pavimento – junto com um labirinto contendo mais restos de fetos ensanguentados – eles foram colocados em jarros de água usados, jarros de leite, potes de comida de gato, sacolas plásticas e jarros de suco. O porão abrigava restos de fetos empilhados até o teto.

Parecia coisa de filmes de terror, mas esse não era um set de filmagens de Hollywood. Era vida real. Mulheres semi-conscientes gemiam na sala de espera, enquanto que nenhuma das pacientes pós operadas estavam conectadas a qualquer aparelho de monitoramento. Duas estavam com um sangramento tão forte e em estado de choque que os paramédicos foram chamados, apenas pra descobrir que a porta da saída de emergência havia sido trancada com cadeado, e ninguém encontrava a chave.

Enquanto isso, Gosnell queria fazer um aborto ao mesmo tempo que os investigadores faziam seu trabalho. Quando ele terminou, sentou-se em sua mesa com luvas cirúrgicas rasgadas e ensanguentadas e comeu seu jantar, gesticulando com seus hashi enquanto respondia as perguntas dos investigadores.

Claramente a equipe havia tropeçado numa cena de crime que foi além da corrida das drogas e uma more suspeita.

No final, a máquina do sistema de Justiça da Pensilvânia enviou Kermit Gosnell para prisão perpétua sem direito a liberdade condicional. O desafio para Phelim e Ann foi como documentar verdadeiramente e com tato as múltiplas realidades inquietantes deste caso.

Com grande profissionalismo eles entrevistaram oficiais da polícia local, da Agência de Fiscalização de Drogas, o FBI, as agências de Supervisão do Estado, juntamente com os funcionários da clínica e ex-pacientes. Por fim, Ann decidiu escrever um livro sobre o caso, além de fazer o filme. “É perturbador que essa história não seja amplamente conhecida”, ela explicou. E houve aspectos do caso que não estariam no filme, mas que seriam gravados. “As pessoas deveriam saber essas coisas”, ela disse, com seu sotaque Irlandês acentuando sua convicção.

O resultado é Gosnell, A História Não Contada do Serial Killer mais Produtivo da América, uma virada de página jornalística pro caso, que é necessário ler para crer. E quando você acha que não pode ficar pior, fica. Ao longo do caminho Ann expõe uma falha atrás da outra, claramente nomeando os oficiais cujas responsabilidades era fazer cumprir a lei ou garantir que os padrões médicos que protegessem as mulheres e crianças fossem mantidos, mas que ignoraram os sinais claros, fizeram vistas grossas ou desprezaram a lei. A mídia local ou nacional não fez melhor.

Ela admite que foi difícil:

Ler o testemunho e verificar a evidência na pesquisa pra esse livro e pra escrever o script do filme foi brutal. Eu chorei sobre meu computador. Eu orei o Pai Nosso na minha mesa de trabalho. Eu não sou um exemplo de santidade – não havia orado por muitos anos – mas quando fui confrontada com o pior dessa história eu não sabia o que mais poderia fazer.

Mais Momentos de Conversão

Semelhantemente, as vozes no seu livro e no filme não são vozes pró-vida. O testemunho mais poderoso no julgamento, Ann disse, foram aqueles dos próprios médicos abortistas, ao descrever o que constituía “um bom aborto legal”. Quase todos os jurados eram pró-escolha no começo, mas alguns deixaram escapar suspiros audíveis quando uma testemunha especialista em aborto explicou detalhadamente o que ela fez. Não foi só Phelim, Ann, e membros do juri que reexaminaram suas opiniões. “Procuradores, diversos jornalistas e até o próprio advogado de Gosnell experimentaram mudança de coração e mente”, Ann escreveu.

"Basicamente, uma vez que você descobre a verdade sobre o Aborto, você deixa a narrativa fácil de ser a favor do aborto muito rápido”, disse Phelim. “Aborto é como um artigo de fé pra algumas pessoas, sabia? Eles não pensam muito sobre isso, mas eles apenas são pró-aborto. Posso dizer, a fé deles foi quebrada. A fé de todos foi quebrada.”

Mudar a mente das pessoas, no entanto, não é uma coisa que eles pretendem fazer. “Costumava-se dizer no meio jornalístico, se você quer mandar uma mensagem, mande um Telex”, Phelim disse. “Nós não estamos aqui para mandar uma mensagem. Nós estamos aqui contar a verdade”. Então, quanto a Gosnell, “nossa mensagem, tanto para quem é pró-vida quanto pró-escolha é, encontre a verdade. Tome uma decisão informada. Porque quando você encontrar a verdade sobre o aborto, sendo pró-aborto, essa verdade vai abalar sua confiança nesta sua posição. E é exatamente isso que o jornalismo deve fazer”.

SQLreduce: Reduce verbose SQL queries to minimal examples

SQLsmith has proven to be an effective tool for finding bugs in different areas in the PostgreSQL server and other products, including security bugs, ranging from executor bugs to segfaults in type and index method implementations.

However, the random queries generated by SQLsmith that trigger some error are most often very large and contain a lot of noise that does not contribute to the error. So far, manual inspection of the query and tedious editing was required to reduce the example to a minimal reproducer that developers can use to fix the problem.

This issue is solved by SQLreduce. SQLreduce takes as input an arbitrary SQL query which is then run against a PostgreSQL server. Various simplification steps are applied, checking after each step that the simplified query still triggers the same error from PostgreSQL. The end result is a SQL query with minimal complexity.

SQLreduce is effective at reducing the queries from original error reports from SQLsmith to queries that match manually-reduced queries.

More details on how it works in a blog post.

Example

In 2018, SQLsmith found a segfault in PostgreSQL running Git revision 039eb6e92f. The reproducer back then was a huge 40-line, 2.2kB query:

select

case when pg_catalog.lastval() < pg_catalog.pg_stat_get_bgwriter_maxwritten_clean() then case when pg_catalog.circle_sub_pt(

cast(cast(null as circle) as circle),

cast((select location from public.emp limit 1 offset 13)

as point)) ~ cast(nullif(case when cast(null as box) &> (select boxcol from public.brintest limit 1 offset 2)

then (select f1 from public.circle_tbl limit 1 offset 4)

else (select f1 from public.circle_tbl limit 1 offset 4)

end,

case when (select pg_catalog.max(class) from public.f_star)

~~ ref_0.c then cast(null as circle) else cast(null as circle) end

) as circle) then ref_0.a else ref_0.a end

else case when pg_catalog.circle_sub_pt(

cast(cast(null as circle) as circle),

cast((select location from public.emp limit 1 offset 13)

as point)) ~ cast(nullif(case when cast(null as box) &> (select boxcol from public.brintest limit 1 offset 2)

then (select f1 from public.circle_tbl limit 1 offset 4)

else (select f1 from public.circle_tbl limit 1 offset 4)

end,

case when (select pg_catalog.max(class) from public.f_star)

~~ ref_0.c then cast(null as circle) else cast(null as circle) end

) as circle) then ref_0.a else ref_0.a end

end as c0,

case when (select intervalcol from public.brintest limit 1 offset 1)

>= cast(null as "interval") then case when ((select pg_catalog.max(roomno) from public.room)

!~~ ref_0.c)

and (cast(null as xid) <> 100) then ref_0.b else ref_0.b end

else case when ((select pg_catalog.max(roomno) from public.room)

!~~ ref_0.c)

and (cast(null as xid) <> 100) then ref_0.b else ref_0.b end

end as c1,

ref_0.a as c2,

(select a from public.idxpart1 limit 1 offset 5) as c3,

ref_0.b as c4,

pg_catalog.stddev(

cast((select pg_catalog.sum(float4col) from public.brintest)

as float4)) over (partition by ref_0.a,ref_0.b,ref_0.c order by ref_0.b) as c5,

cast(nullif(ref_0.b, ref_0.a) as int4) as c6, ref_0.b as c7, ref_0.c as c8

from

public.mlparted3 as ref_0

where true;

SQLreduce can effectively reduce that monster to just this:

SELECT pg_catalog.stddev(NULL) OVER () AS c5 FROM public.mlparted3 AS ref_0

Availability

SQLreduce is open source licensed under the MIT license. The source code is on GitHub: https://github.com/credativ/sqlreduce

Debian/Ubuntu packages for sqlreduce are shipped on apt.postgresql.org.

SQLreduce is an open source product by credativ GmbH.

Electric Vehicle Innovator Elon Musk Calls for More American Oil and Gas Production

Nikolay Samokhvalov: How partial, covering, and multicolumn indexes may slow down UPDATEs in PostgreSQL

"The Harper."

"The Harper."

by Vlas Mikhailovich Doroshevich (1864--1922).

The Emperor Jin-La-O, may his memory be sacred to the whole braid-adorned world, was a wise and just Emperor.

One day he summoned his entourage and said to them:

- I would like to learn the name of the greatest villain in all of Beijing - in order to punish him appropriately, frighten the wicked and encourage the virtuous.

The courtiers bowed at his feet and set forth. For three days and three nights they walked around Beijing, visiting bazaars, teahouses, opium dens, temples and generally places where people crowd. They listened attentively.

And on the fourth day they came to the Emperor, bowed at his feet and said:

- We did all that our humble strengths could do to fulfill your heavenly will. And indeed they had.

- Do you now know who is the greatest villain in Beijing? - asked the Emperor.

- Yes, Master of the Universe. We know him. -- His name? - Jian-Fu.

- What has this scoundrel been up to? - exclaimed, boiling over with noble indignation, the Emperor.

- He plays the harp! - the emissaries answered.

- What crimes does this harper commit? Does he kill people? - asked the Emperor.

-- No.

- Does he rob?

-- No.

- Does he steal?

-- No.

- But what, at last, are the incredible doings of this man? - exclaimed the Emperor, lost in conjecture.

- Exactly nothing! - the emissaries answered. - He only plays the harp. And he plays splendidly, I must confess. You yourself, the Lord of the Sun and the Lord of the Universe, have repeatedly deigned to listen to his playing and even approved of it.

-- Yes Yes! Now I recall! Harper Jian-Fu! I recall. An excellent harper! But why do you consider him to be the greatest villain in Beijing?

The courtiers bowed and answered:

- Because all of Beijing scolds him. "Scoundrel Jian-Fu"! "Swindler Jian-Fu"! "Villain Jian-Fu"! - is all you hear at every step you take. We went around all the temples, all the bazaars, all the teahouses, all the places where people crowd - and everywhere everyone spoke only of Jian-Fu. And when they spoke of him, they did nothing but to scold him.

-- Strange! - exclaimed the Emperor. - No, there is surely something amiss here!

And he decided to investigate the peculiar matter himself. He disguised himself as a commoner and, accompanied by two similarly-disguised bodyguards, set off to wander the streets of Beijing. He came to the bazaar.

The morning market was over, the merchants were folding their baskets and chatting among themselves.

- This wretch Jian-Fu! - shouted one of the traders. - He played a sad song again last night at the new moon holiday. If only he would play something merry!

- Don't hold your breath! the other laughed viciously. - Can this scoundrel even play merry songs?! Making merry is for those whose souls are as white as a tea-tree flower. But this swindler has a soul as black as ink. That's why he plays only sad songs.

- How does such a villain cheat the gallows! someone in the crowd exclaimed.

- He ought to be cut in half with a blunt saw, and certainly the long way through! - corrected a neighbor.

- No, tie him to two horses by the arms and legs and tear him apart!

- Put him in a sack with cats that have not been fed for a long time!

And everyone shouted:

- Villain Jian-Fu! - Scoundrel Jian-Fu! - How does the earth stand him!

The Emperor went to the tea house.

Visitors sat on mats and drank tea from tiny cups.

- Good afternoon, good people! Let the souls of your ancestors whisper good advice to your souls! - greeted the Emperor, entering and bowing. - What's new in Beijing?

- Why, before you got here, we were just talking about the villain Jian-Fu! - said one of those present. - Has he done something? - asked the Emperor. -- What? Didn't you hear? The whole city is talking about it! - exclaimed all around. - Yesterday he accidentally hooked his fingernail on the wrong string and hit the wrong note! Scoundrel!

- What a horror it was! one of them exclaimed, pretending to writhe.

- And yet he hasn't been hanged yet! - Nor torn to pieces!

And all, indignant to the depths of their souls, exclaimed:

- Scoundrel Jian-Fu! - Swindler Jian-Fu! - Villain Jian-Fu!

The Emperor went to the opium den. There was a terrible din there.

-- What happened? - asked the Emperor.

-- A! As always! They are arguing about Jian-Fu! - the owner waved his hand.

The smokers, lying on their cots, scolded Jian-Fu through and through.

- He played five songs yesterday! one shouted. - As if two weren't enough! - He played five songs yesterday! - grumbled another. - As if he couldn't have played seven or eight!

And they scolded Jian-Fu until they fell asleep with their eyes open.

And even then, they muttered in their sleep: - That villain Jian-Fu! - Scoundrel Jian-Fu!

- Swindler to rule all swindlers, Jian-Fu!

The Emperor went to the temple.

People prayed to the gods, but when they got tired of praying, they began to exchange remarks and whispered to each other:

- And Jian-Fu, it so happens, is a scoundrel!

In short, until nightfall the Emperor walked around the whole city and everywhere heard only: - Jian-Fu! Jian-Fu! Jian-Fu! The villain! Scoundrel! Swindler! Finally, in the evening, returning home, he stopped on his way at the house of a poor coolie and, wishing the hosts a good supper, asked:

- Have you heard the harper Jian-Fu?

- How could we have! - answered the poor coolie. - Do you think we have time for entertainment or money to pay to hear harp music! We haven't even enough for rice! But we still know that Jian-Fu is a scoundrel! All of Beijing is talking about it.

And the whole family set off nitpicking the playing of a man whom they had never seen or heard, and said: - That villain Jian-Fu! - Scoundrel Jian-Fu! - Swindler Jian-Fu!

The Emperor, returning to the palace, was beside himself with amazement.

- What could all of this mean?

And, despite the late hour, he ordered that Jian-Fu be found right away and brought to him.

The harper was found and immediately brought to the Emperor.

- Hello, Jian-Fu! - said the Emperor. - Do you know that all of Beijing is scolding no one but you?

- I know, Heavenly Wisdom! - replied Jian-Fu as he bowed low.

- All they do is nitpick your playing! They pick on such trifles that it's simply awful. And they scold you for such little things through and through!

- I know, Heavenly Wisdom! - babbled Jian-Fu.

- So why is this happening?

- This happens, it turns out, for a very simple reason! - answered Jian-Fu. They are not allowed to discuss anything except for my harp-playing. So they singled me out for nitpicking and scolding.

The Emperor put his finger to his forehead and said:

-- A!

And he banned all discussion of Jian-Fu's playing as well. The Emperor Jin-La-O was a just Emperor.

Extending the Danish-Hungarian Empire

Ezra Bible App 1.1 released!

Ezra Bible App 1.1 has been released! This release features an emoji picker for tags and notes, a language selection box in the options menu, a possibility to load the Bible text chapter by chapter instead of fully for the whole book and various other enhancements. Furthermore, a Romanian locale has been added. For more details and download links, please see the release note on GitHub.

The Complex Cultural Issues Surrounding Abortion, Including Sex-Trafficking and Pornography

The Gaslighting of the American People

Kaarel Moppel: Running Postgres in Docker – why and how?

The big question we hear quite often is, “Can and should we run production Postgres workloads in a Docker? Does it work?” The answer in short: yes, it will work… if you really want it to… or if it’s all only fun and play, i.e. for throwaway stuff like testing.

Containers, commonly also just called Docker, have definitely been a thing for quite a few years now. (There are other popular container runtimes out there, and it’s not a proprietary technology per se, but let’s just say Docker to save on typing.) More and more people are “jumping on the container-ship” and want to try out Docker, or have already given this technology a go. However, containers were originally designed more as a vehicle for code; they were initially intended to provide a worry-free “batteries included” deployment experience. The idea is that it “just works” anywhere and is basically immutable. That way, quality can easily be tested and guaranteed across the board.

Those are all perfectly desirable properties indeed for developers…but what if you’re in the business of data and database management? Databases, as we know, are not really immutable – they maintain a state, so that code can stay relatively “dumb” and doesn’t have to “worry” about state. Statelessness enables rapid feature development and deployment, and even push-button scaling – just add more containers!

Should I use Postgres with Docker?

If your sensors are halfway functional, you might have picked up on some concerned tones in that last statement, meaning there are some “buts” – as usual. So why not fully embrace this great modern technology and go all in? Especially since I already said it definitely works.

The reason is that there are some aspects you should at least take into account to avoid cold sweats and swearing later on. To summarise: you’ll benefit greatly for your production-grade use cases only if you’re ready to do the following:

a) live fully on a container framework like Kubernetes / OpenShift

b) depend on some additional 3rd party software projects not directly affiliated with the PostgreSQL Global Development Group

c) or maintain either your own Docker images, including some commonly needed extensions, or some scripts to perform common operational tasks like upgrading between major versions.

To reiterate – yes, containers are mostly a great technology, and this type of stuff is interesting and probably would look cool on your CV…but: the origins of container technologies do not stem from persistent use cases. Also, the PostgreSQL project does not really do much for you here besides giving you a quick and convenient way to launch a standard PostgreSQL instance on version X.

A testers’ dream

Not to sound too discouraging – there is definitely at least one perfectly valid use case out there for Docker / containers: it’s perfect for all kinds of testing, especially for integration and smoke testing!

Since containers are basically implemented as super light-weight “mini VMs”, you can start and discard them in seconds! That, however, assumes that the image has already been downloaded. If not, then the first launch will take a minute or two, depending on how good your internet connection is

As a matter of fact, I personally usually have all the recent (9.0+) versions of Postgres constantly running on my workstation in the background, via Docker! I don’t of course use all those versions too frequently – however, since they don’t ask for too much attention, and don’t use up too many resources if “idling”, they don’t bother me. Also, they’re always there for me when I need to test out some Postgres statistic fetching queries for our Postgres monitoring tool called pgwatch2. The only annoying thing that could pester you a bit is – if you happen to also run Postgres on the host machine, and want to take a look at a process listing to figure out what it’s doing, (e.g. `ps -efH | grep postgres`) the “in container” processes show up and somewhat “litter” the picture.

Slonik in a box – a quickstart

OK, so I want to benefit from those light-weight pre-built “all-inclusive” database images that everyone is talking about and launch one – how do I get started? Which images should I use?

As always, you can’t go wrong with the official stuff – and luckily, the PostgreSQL project provides all modern major versions (up to v8.4 by the way, released in 2009!) via the official Docker Hub. You also need to know some “Docker foo” of course. For a simple test run, you usually want something similar to what you can see in the code below.

NB! As a first step, you need to install the Docker runtime / engine (if it is not already installed) – which I’ll not be covering as it should be a simple process of following the official documentation line by line.

Also note: when launching images, we always need to explicitly expose or “remap” the default Postgres port to a free port of our preference. Ports are the “service interface” for Docker images, over which all communication normally happens, so that we actually don’t need to care about how the service is internally implemented!

# Note that the first run could take a few minutes due to the image being downloaded…

docker run -d --name pg13 -p 5432:5432 -e POSTGRES_HOST_AUTH_METHOD=trust postgres:13

# Connect to the container that’s been started and display the exact server version

psql -U postgres -h localhost -p 5432 -c "show server_version" postgres

server_version

────────────────────────────────

13.1 (Debian 13.1-1.pgdg100+1)

(1 row)

Note that you don’t have to actually use “trust” authentication, but can also set a password for the default “postgres” superuser via the POSTGRES_PASSWORD env variable.

Once you’ve had enough of Slonik’s services for the time being, just throw away the container and all the stored tables / files etc with the following code:

# Let’s stop the container / instance docker stop pg13 # And let’s also throw away any data generated and stored by our instance docker rm pg13

Couldn’t be any simpler!

NB! Note that I could also explicitly mark the launched container as “temporary” with the ‘–rm’ flag when launching the container, so that any data remains would automatically be destroyed upon stopping.

Peeking inside the container

Now that we have seen how basic container usage works, complete Docker beginners might get curious here – how does it actually function? What is actually running down there inside the container box?

First, we should probably clear up the two concepts that people often initially mix up:

- A Docker image: images are immutable “batteries (libraries) included” software packages that you can download from some public or private Docker registry or build yourself, that then can be “instantiated”, i.e. launched.

- A Docker container: once we have launched an image, we’re dealing with a “live clone” that should actually be called a container! And now, its files can be modified, although in theory this freedom should not be overused – or at least not in a direct manner without volumes (see below).

Let’s make sense of this visually:

# Let’s take a look at available Postgres images on my workstation # that can be used to start a database service (container) in the snappiest way possible docker images | grep ^postgres | sort -k2 -n postgres 9.0 cd2eca8588fb 5 years ago 267MB postgres 9.1 3a9dca7b3f69 4 years ago 261MB postgres 9.2 18cdbca56093 3 years ago 261MB postgres 9.4 ed5a45034282 12 months ago 251MB postgres 9.5 693ab34b0689 2 months ago 197MB postgres 9.6 ebb1698de735 6 months ago 200MB postgres 10 3cfd168e7b61 3 months ago 200MB postgres 11.5 5f1485c70c9a 16 months ago 293MB postgres 11 e07f0c129d9a 3 months ago 282MB postgres 12 386fd8c60839 2 months ago 314MB postgres 13 407cece1abff 14 hours ago 314MB # List all running containers docker ps CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES 042edf790362 postgres:13 "docker-entrypoint.s…" 11 hours ago Up 11 hours 0.0.0.0:5432->5432/tcp pg13

Other common tasks when working with Docker might be:

* Checking the logs of a specific container, for example, to get more insights into query errors

# Get all log entries since initial launch of the instance docker logs pg13 # “Tail” the logs limiting the initial output to last 10 minutes docker logs --since "10m" --follow pg13

* Listing the IP address of the image

Note that by default, all Docker containers can speak to each other, since they get assigned to the default subnet of 172.17.0.0/16. If you don’t like that, you can also create custom networks to cordon off some containers, whereby then they can also access each other using the container name!

# Simple ‘exec’ into container approach

docker exec -it pg13 hostname -I

172.17.0.2

# A more sophisticated way via the “docker inspect” command

docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' pg13

* Executing custom commands on the container

Note that this should be a rather rare occasion, and is usually only necessary for some troubleshooting purposes. You should try not to install new programs and change the files directly, as this kind of defeats the concept of immutability. Luckily, in the case of official Postgres images it can be easily done, since it runs under “root” and the Debian repositories are also still connected – which a lot of images remove, in order to prevent all sorts of maintenance nightmares.

Here is an example of how to install a 3rd party extension. By default, we only get the “contrib extensions” that are part of the official Postgres project.

docker exec -it pg13 /bin/bash # Now we’re inside the container! # Refresh the available packages listing apt update # Let’s install the extension that provides some Oracle compatibility functions... apt install postgresql-13-orafce # Let’s exit the container (can also be done with CTRL+D) exit

* Changing the PostgreSQL configuration

Quite often when doing some application testing, you want to measure how much time the queries really take – i.e. measure things from the DB engine side via the indispensable “pg_stat_statements” extension. This can be done relatively easily, without going “into” the container! Starting from Postgres version 9.5, to be exact…

# Connect with our “Dockerized” Postgres instance psql -h localhost -U postgres postgres=# ALTER SYSTEM SET shared_preload_libraries TO pg_stat_statements; ALTER SYSTEM postgres=# ALTER SYSTEM SET track_io_timing TO on; ALTER SYSTEM # Exit psql via typing “exit” or pressing CTRL+D # and restart the container docker restart pg13

Don’t forget about the volumes

As stated in the Docker documentation: “Ideally, very little data is written to a container’s writable layer, and you use Docker volumes to write data.”

The thing about containers’ data layer is that it’s not really meant to be changed! Remember, containers should be kind of immutable. The way it works internally is via “copy-on-write”. Then, there’s a bunch of different storage drivers used over different versions of historical Docker runtime versions. Also, there are some differences which spring from different host OS versions. It can get quite complex, and most importantly, slow on the disk access level via the “virtualized” file access layer! It’s best to listen to what the documentation says, and set up volumes for your data to begin with.

Aha, but what are volumes, exactly? They’re directly connected and persistent OS folders where Docker tries to stay out of the way as much as possible. That way, you don’t actually lose out on file system performance and features. The latter is not really guaranteed, though – and can be platform-dependent. Things might look a bit hairy, especially on Windows (as usual), where one nice issue comes to mind. The most important keyword here might be “persistent” – meaning volumes don’t disappear, even when a container is deleted! So they can also be used to “migrate” from one version of the software to another.

How should you use volumes, in practice? There are two ways to use volumes: the implicit and the explicit. The “fine print” by the way, is available here.

Also, note that we actually need to know beforehand what paths should be directly accessed, i.e. “volumized”! How can you find out such paths? Well, you could start from the Docker Hub “postgres” page, or locate the instruction files (the Dockerfile) that are used to build the Postgres images and search for the “VOLUME” keyword. The latter can be found for Postgres version 13 here.

# Implicit volumes: Docker will automatically create the left side folder if it is not already there docker run -d --name pg13 -p5432:5432 -e POSTGRES_HOST_AUTH_METHOD=trust \ -v /mydatamount/pg-persistent-data:/var/lib/postgresql/data \ postgres:13 # Explicit volumes: need to be pre-initialized via Docker docker volume create pg13-data docker run -d --name pg13 -p5432:5432 -e POSTGRES_HOST_AUTH_METHOD=trust \ -v pg13-data:/var/lib/postgresql/data \ postgres:13 # Let’s inspect where our persistent data actually “lives” docker volume inspect pg13-data # To drop the volume later if the container is not needed anymore use the following command docker volume rm pg13-data

Some drops of tar – big benefits possible, with some drawbacks

To tie up the knots on this posting – if you like containers in general, and also need to run some PostgreSQL services – go ahead! Containers can be made to work pretty well, and for bigger organizations running hundreds of PostgreSQL services, it can actually make life a lot easier and more standardized once everything has been automated. Most of the time, the containers won’t bite you.

But at the same time, you had better be aware of the pitfalls:

- Docker images and the whole concept of containers are actually optimized for the lightning-fast and slim startup experience so that by default even the data is not properly separated into a separate persistence unit! Which for databases could end in a catastrophe, if appropriate measures (volumes) are not put into place.

- Using containers won’t give you any automatic and magical high-availability capabilities. This usually comes provided by the container framework – either via some simple “stateful sets”, or more advanced “operators”, or via cleverly bundled database and “bot” images which rely on a central highly-available consensus database.

- Life will be relatively easy only when you go “all in” on some container management framework like Kubernetes, and additionally select some “operator” software (Zalando and Crunchy Postgres operators are the most popular ones, I believe) to take care of the nitty-gritty.

- Batteries are not included: you pretty much only get persistence and a running Postgres major version! For example, a very common task – major version upgrades – is surprisingly out of scope for the default Postgres images! It is also out of scope for some Kubernetes operators – this means you need to be ready to get your hands dirty and create some custom intermediate images, or find some 3rd party ones like Spilo.

TLDR;

Don’t want to sound like a luddite again, but before going “all in” on containers you should acknowledge two things. One, that there are major benefits to production-level database containers only if you’re using some container automation platform like Kubernetes. Two, the benefits will come only if you are willing to make yourself somewhat dependent on some 3rd party software vendors. 3rd party vendors are not out to simplify the life of smaller shops, but rather cater to bigger “K8s for the win” organizations. Often, they encode that way of thinking into the frameworks, which might not align well with your way of doing things.

Also, not all aspects of the typical database lifecycle are well covered. My recommendation is: if it currently works for you “as is”, and you’re not 100% migrating to some container-orchestration framework for all other parts of your software stack, be aware that you’re only winning in the ease of the initial deployment and typically also in automatic high-availability (which is great of course!) – but not necessarily in all aspects of the whole lifecycle (fast major version upgrades, backups the way you like them, access control, etc).

On the other hand – if you feel comfortable with some container framework like Kubernetes and/or can foresee that you’ll be running oodles of database instances – give it a go! — after you research possible problem points, of course.

On the positive side – since I am in communication with a pretty wide crowd of DBA’s, I can say that many bigger organizations do not want to look back at the traditional way of running databases after learning to trust containers.

Anyway, it went a bit long – thanks for reading, and please do let me know in the comments section if you have some thoughts on the topic!

The post Running Postgres in Docker – why and how? appeared first on Cybertec.

Database Lab Engine 2.1.0 released

Database Lab Engine is an open-source tool for building powerful development and testing environments based on thin cloning of PostgreSQL databases. Using Database Lab open-source CLI or API (and if you are using Database Lab SaaS, proprietary GUI), on a single machine with, say, a 1 TiB disk, you can easily create and destroy dozens of database copies of size 1 TiB each. All these copies are independently modifiable and created/destroyed in just a few seconds. This can become a game-changer in your development and testing workflow, improving time-to-market, and reducing costs of your non-production infrastructure.

In version 2.1 contains numerous improvements. Main new features are:

- Better data protection and security:

- robust configuration defining how data is patched when snapshots are automatically created (both shell and SQL scripts are now supported),

- new option: preserve passwords for the existing DB users.

- [experimental] DLE API and the CLI tool are extended to have a new feature: “CI Observer” helping control DB schema changes a.k.a. DB migrations (see CLI reference, watch demo). This is a small step towards the big goal: have 100% coverage for testing DB migrations in CI using full-sized thin clones.

Links:

- Database Lab Engine 2.1.0 release notes

- Resources – an interactive tutorial, case studies

- Blog

- Tutorial for RDS users

- Tutorial for any PostgreSQL database

- Database Lab Engine configuration reference

Any feedback is highly appreciated:

- Twitter: @Database_Lab

- Community Slack (English), and Telegram group (Russian)

- Database Lab Engine repository, with the issue tracker

![And over heeee[...]eeeere (i)s Saturn. And over heeee[...]eeeere (i)s Saturn.](https://imgs.xkcd.com/comics/space_typography.png)