Okay, no sex, but a discussion about quantum computers.

Steven Soderbergh directed the famous movie: Sex, Lies, and Videotape. This 1989 movie won the Palme d’Or at the 1989 Cannes Film Festival, and is now in the United States Library of Congress’ National Film Registry for being “culturally, historically, or aesthetically significant.”

Today I want to explore claims about quantum computation.

With all due apologies to Soderbergh, his title seems perfect for our discussion. There may be no sex, but there is plenty of sizzle surrounding quantum computation. We just finished a thorough and wonderful debate here on the feasibility of quantum computers; see this for part of the debate. It was ably moderated by Ken, and the two advocates were Gil Kalai, against, and Aram Harrow, for.

While there are still many interesting issues to add to the debate, our discussion is not about whether quantum computers are feasible or not. We will stipulate that they can and will be built eventually, at some future time. The issue is about the present:

What has been proved about them so far?

And, sadly, we will also discuss what is believed to be proved but has no proof.

Claims

There are many claims in the literature on quantum computers that I would like to address here. Some are right, some are wrong, and some are at best misleading. Let’s start.

Quantum Computers have been proved to be more powerful than classical. Wrong.

This has been repeated many times and is often claimed in the literature. But there is no mathematical proof that a quantum computer that runs in polynomial quantum time cannot be simulated in polynomial classical time. None. Let me repeat that. There is no such proof. It is an open problem whether

$$\mathsf{BQP} = \mathsf{P}.$$

If this is true, then quantum polynomial time equals polynomial time. Okay, most do not believe that this is true, but we are talking about what is proved. Nor is there any speedup theorem about improving the exponent $k$ in a general $n^k$-time algorithm when you have $n$ qubits vis-à-vis $n$ bits. Thus from a mathematical viewpoint it is clear that there is no proof that quantum is better than classical. None. Zero.
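For orientation, here is the chain of containments that is actually proved. It is a standard summary rather than something taken from the debate, and none of its inclusions is known to be strict:

$$\mathsf{P} \subseteq \mathsf{BPP} \subseteq \mathsf{BQP} \subseteq \mathsf{PP} \subseteq \mathsf{PSPACE}$$

Since quantum polynomial time sits inside polynomial space, a proof that it differs from classical polynomial time would also separate $\mathsf{P}$ from $\mathsf{PSPACE}$, which is itself a long-standing open problem.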

Quantum Computers can harness exponential parallelism, trying every possible solution at once. At best half-true.

Quantum computers can create superpositions of $2^n$-many basis vectors, each representing a string in $\{0,1\}^n$ that can be a trial solution. However, the best-known quantum algorithm for finding a single solution still has exponential running time, order-of $2^{n/2}$, and this is tight in black-box models (see below). The allowed linear algebra operations restrict the way this parallelism can be exploited. Scott Aaronson in particular has expended much effort debunking claims that quantum computers are imminently effective at solving certain NP-hard problems.
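To make the square-root scaling concrete, here is a minimal sketch of Grover-style search simulated on a dense amplitude vector. The function name, the choice of ten qubits, and the marked index are illustrative assumptions of ours. The simulation itself does work proportional to the vector length in every round; the point is only the number of rounds, which grows like the square root of the number of candidate strings and so is still exponential in the number of qubits.

```python
import math

def grover_success_probability(n_qubits, marked, iterations=None):
    """Simulate Grover search for one marked index on a dense real vector.

    Returns (iterations_used, probability_of_measuring_marked).
    """
    size = 2 ** n_qubits
    if iterations is None:
        # Standard choice for one marked item: about (pi/4) * sqrt(size) rounds.
        iterations = math.floor(math.pi / 4 * math.sqrt(size))
    # Start in the uniform superposition over all basis strings.
    amps = [1.0 / math.sqrt(size)] * size
    for _ in range(iterations):
        # Oracle step: flip the sign of the marked amplitude.
        amps[marked] = -amps[marked]
        # Diffusion step: reflect every amplitude about the mean.
        mean = sum(amps) / size
        amps = [2.0 * mean - a for a in amps]
    return iterations, amps[marked] ** 2

# Illustrative run: 1024 candidate strings, one of them marked.
rounds, prob = grover_success_probability(10, marked=777)
print(rounds, prob)
```

With 1024 candidates the loop runs 25 rounds and the marked string is then measured with probability close to one. A superposition over every candidate does not by itself hand over the answer in one step.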

Quantum Computers can factor integers faster than classical ones are known to. Right.

But misleading, especially when the “are known to” part is sloughed off. There is no proof that factoring cannot be done classically in polynomial time. None. The best factoring algorithms are indeed super-polynomial, but there is no mathematical proof that they are optimal. So tomorrow, or next week, or secretly already?, there could be a classical polynomial time factoring algorithm. Peter Sarnak, for example, is on the record as believing this. I am too. But beliefs aside, there certainly could be such an algorithm.

Quantum Computers have been proved to be more powerful than classical in the black box model. Right. But this is at best misleading; at worst ...

There are proofs that quantum machines can out-perform classical in this model. But the model is unfair. The classical machine gets only oracle access to some black box function, say $f$. Its job is to find some $x$ so that $f(x)$ has some property. As with oracle results in complexity theory, it is often easy to show that this requires a huge exponential search.

What about the quantum machines? They can zip along and solve the problem in polynomial time. So this proves they are more powerful, right? No. The difficulty is that the quantum machine needs to have the function $f$ encoded as part of its quantum circuit. So the quantum computation gets the circuit representation of the black box; in other words, the box is not black. The box is a white box: we can see the wires and gates that implement it.

This seems unfair, and is at best misleading. The fair comparison is to allow the classic machine the same ability to see the circuit representation of the box. The trouble now is the proof disappears. Without the black box restriction there is no mathematical proof that a classic machine cannot unravel what the box does and cheat in some way. So this is at best misleading. We also tried to see what happens if we open the box for the classical algorithm, here and here.
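A deliberately easy toy case shows how much the access model matters. In this sketch the "circuit" is a hypothetical one of our own choosing: a single AND over one literal per input wire, so it accepts exactly one secret string. A classical searcher restricted to queries may need every one of the possible inputs, while a searcher allowed to read the gates recovers the string directly. For general circuits no such shortcut is known; the example only illustrates why a lower bound proved for query access says nothing once the box is opened.

```python
from itertools import product

def make_and_circuit(secret):
    """Hypothetical circuit: one (wire, required_bit) literal per input, all ANDed."""
    return [(i, bit) for i, bit in enumerate(secret)]

def evaluate(circuit, x):
    """Run the circuit on input bits x; it accepts only the secret string."""
    return all(x[i] == bit for i, bit in circuit)

def black_box_search(oracle, n):
    """Only queries are allowed, so try inputs one by one and count them."""
    queries = 0
    for x in product((0, 1), repeat=n):
        queries += 1
        if oracle(x):
            return x, queries
    return None, queries

def white_box_search(circuit, n):
    """The gates are visible, so read the accepted input off the literals."""
    x = [0] * n
    for i, bit in circuit:
        x[i] = bit
    return tuple(x)

n = 12
secret = (1,) * n  # the last string in the scan order, so the worst case here
circuit = make_and_circuit(secret)
found, queries = black_box_search(lambda x: evaluate(circuit, x), n)
print(queries, found == white_box_search(circuit, n))  # prints: 4096 True
```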

Quantum Computers cannot be efficiently simulated by classical, even for fifty qubits. Wrong.

I heard this the other day, and it is also stated on the web. For instance, Neil Gershenfeld was quoted by Julian Brown in his 2002 book Minds, Machines, and the Multiverse: The Quest for the Quantum Computer as saying,

“Round about fifty qubits is where you begin to beat classical computers. What that means is that with custom hardware tuned for computation with spectroscopy, you could just begin to graze the point where classical computers run out.”

Yes, this was over a decade ago, but petabyte-scale computing was on the horizon then. Note that the interest in this question is quite reasonable, even though fifty qubits are way too few to implement any interesting quantum algorithm, certainly with the overhead of current fault-tolerant coding. The thought goes that fifty qubits may, however, be sufficient to do something interesting. Perhaps they can solve some physical question about a real system? A very interesting question. Let’s turn to discuss this question and scale in more detail.

The Fifty Bit Problem

The challenge is to figure out how we can simulate on a classical computer a quantum computation that uses at most fifty qubits. This is not nearly enough qubits to do anything interesting for cryptography, but makes for a nice question. The obvious way to simulate such a quantum computation is not impossible for a classical machine, but it is still not a simple computation. Thus the challenge: can we do this classically? Can we do a fifty-qubit quantum problem on a laptop? Or on a server? Or in the cloud?

The obvious solution is to write down the initial vector of size $2^{50}$ and start applying the quantum gates to the vector. This immediately hits a problem, since such a vector is really large. But it is not that large. The size is “just” a petabyte, or actually 1/8 of a petabyte. The MapReduce cloud framework has already been used to carry out some basic algorithms on petabytes of data.
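The arithmetic behind that figure is easy to check. The sketch below assumes a petabyte means $2^{50}$ bytes and that the 1/8 figure counts one bit per vector entry. The second line of output uses an assumption that is ours rather than the post's: each amplitude stored as a double-precision complex number, 128 bits in all.

```python
def state_vector_bytes(n_qubits, bits_per_entry):
    """Bytes needed to store 2**n_qubits entries of the given width in bits."""
    return (2 ** n_qubits) * bits_per_entry // 8

PEBIBYTE = 2 ** 50  # a binary petabyte, in bytes

one_bit = state_vector_bytes(50, 1)        # one bit per entry
complex128 = state_vector_bytes(50, 128)   # assumed: two 64-bit floats per amplitude

print(one_bit / PEBIBYTE)     # 0.125
print(complex128 / PEBIBYTE)  # 16.0
```

So the dense vector ranges from an eighth of a petabyte to sixteen petabytes depending on how much precision each entry carries. That is large, but not out of reach for cloud-scale storage.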

Quantum operations are notionally sparse, each affecting only a small constant number of qubits, generally up to three. Under the standard encoding, each qubit splits the long vector into a measurement set for a $0$ value and a set for the $1$ value, each of size $2^{49}$. However, under different encoding schemes the operations could be local. In particular, many important quantum states, before and after some common quantum circuit operations, are succinct. There is scope for doing simulations analogous to what happens with homomorphic encryption, whereby operators might work directly on the succinct functional representations.
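The contrast between the two encodings can be sketched on a small example. Below, `apply_dense` updates a full vector by pairing each index whose chosen bit is 0 with its partner whose bit is 1, which is the split into two halves described above. `apply_product` handles the simplest succinct case, a product state stored as one pair of amplitudes per qubit, where a one-qubit gate touches a single pair. The function names and the bit-ordering convention are our assumptions, and this representation breaks down as soon as a gate entangles qubits, so it is an illustration and not a general method.

```python
import math

def apply_dense(state, gate, q):
    """Apply a 2x2 gate to qubit q of a dense vector of 2**n amplitudes.

    Bit q of an index holds that qubit's value (an assumed convention), so
    the vector splits into a 0-half and a 1-half that are mixed pairwise.
    """
    out = list(state)
    step = 1 << q
    for i in range(len(state)):
        if (i & step) == 0:  # i has qubit q = 0; i + step has qubit q = 1
            a0, a1 = state[i], state[i + step]
            out[i] = gate[0][0] * a0 + gate[0][1] * a1
            out[i + step] = gate[1][0] * a0 + gate[1][1] * a1
    return out

def apply_product(factors, gate, q):
    """Apply the same gate when the state is stored as n separate 2-vectors."""
    a0, a1 = factors[q]
    new = list(factors)
    new[q] = (gate[0][0] * a0 + gate[0][1] * a1,
              gate[1][0] * a0 + gate[1][1] * a1)
    return new

def amplitude(factors, index):
    """Amplitude of one basis string, computed from the succinct form."""
    amp = 1.0
    for q, pair in enumerate(factors):
        amp *= pair[(index >> q) & 1]
    return amp

s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]  # Hadamard gate

n = 4
dense = [1.0] + [0.0] * (2 ** n - 1)  # the all-zeros basis state, 16 amplitudes
factors = [(1.0, 0.0)] * n            # the same state as 4 pairs
for q in range(n):
    dense = apply_dense(dense, H, q)
    factors = apply_product(factors, H, q)

agree = all(abs(dense[i] - amplitude(factors, i)) < 1e-12 for i in range(2 ** n))
print(agree)  # True
```

The dense update touches every entry of the vector for each gate, while the product form touches two numbers. The open question is how far beyond such easy states a succinct form can be maintained.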

It strikes us that simulating a fifty-qubit algorithm classically is a concrete large-data kind of problem, one that may be interesting for its own sake. This stands apart from recent work on general “de-quantization” of circuits and algorithms, for which Maarten van den Nest’s arXiv page is a good place to start.

Open Problems

What is really going on with the information structures that are acted on by quantum computers? That they are $2^n$-long bit-vectors in any sense we have come to doubt. Is there a better general way to represent them?

Even with the $2^n$-vector representation, for $n = 50$ qubits, can we make classical simulations concretely efficient?

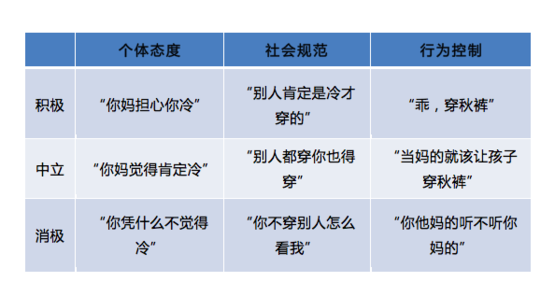

如果你觉得文章太长,请随时记得看两眼这个九宫格,非常实用,解决生活中很多问题,果壳网诸君亲测有效。

如果你觉得文章太长,请随时记得看两眼这个九宫格,非常实用,解决生活中很多问题,果壳网诸君亲测有效。 过个年容易么!图片来源:epochtimes.com

过个年容易么!图片来源:epochtimes.com 咦?大爷你说啥?图片来源:youtube.com

咦?大爷你说啥?图片来源:youtube.com 你不结婚你想干嘛?图片来源:enet.com.cn

你不结婚你想干嘛?图片来源:enet.com.cn