Shared posts

Japan's H3 Rocket Explodes. It's a Win for SpaceX and Even Mitsubishi.

Researchers Have Successfully Grown Electrodes In Living Tissue

Read more of this story at Slashdot.

Wind Turbine Giant Develops Solution To Keep Blades Out of Landfills

How To Use Microsoft PowerToys to Improve Productivity

Microsoft PowerToys is a free download for Windows that adds features for power users that are not included in Windows 10 or Windows 11 by default. In this article, I’ll show you my favorite PowerToys tools and why once you start using them, you won’t be able to live without them.

What is Microsoft PowerToys?

Microsoft PowerToys is a free set of tools for Windows 10 and Windows 11. The tools are designed to improve your workflows in Windows for increased productivity. You can download PowerToys from GitHub or install it from the Microsoft Store.

What tools are included in PowerToys?

Currently, PowerToys includes 17 tools:

- Always On Top

- Awake

- Color Picker

- FancyZones

- File Locksmith

- File Explorer add-ons

- Hosts File Editor

- Image Resizer

- Keyboard Manager

- Mouse utilities

- PowerRename

- PowerToys Run

- Quick Accent

- Screen Ruler

- Shortcut Guide

- Text Extractor

- Video Conference Mute

Most of the tools are self-explanatory from their name. But others require a little explanation.

FancyZones

FancyZones is useful for those with large displays. It’s a window manager that lets you customize layouts for arranging and snapping windows, and restore your layouts quickly.

Awake

Awake lets you override the selected power plan settings in Windows to make sure your PC doesn’t go to sleep while you’re performing an important task.

File Locksmith

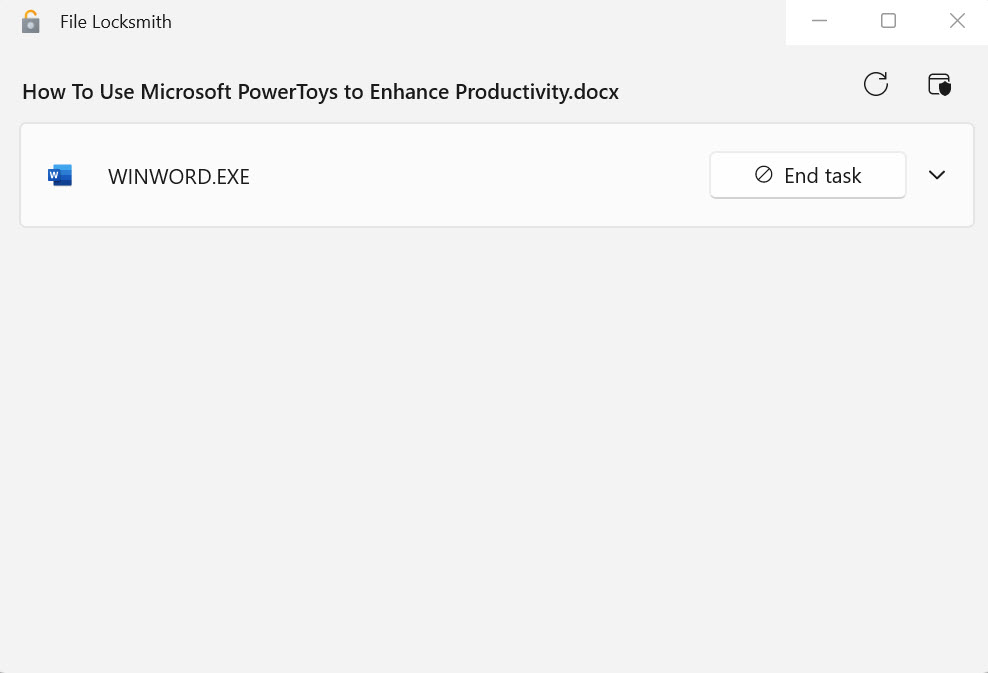

File Locksmith lets you check which files are currently in use, and by which processes, through a Windows shell extension.

Hosts File Editor

If you don’t know what the hosts file is, you probably don’t need to read about this tool further. But it is a tool that lets you easily and quickly edit the hosts file, which contains a list of IP addresses and matching domain names used for name resolution.
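To make the file format concrete, here is a minimal Python sketch that reads hosts-style text into a name-to-address map. It is not how the Hosts File Editor works internally; the function name `parse_hosts`, the sample entries, and the choice to keep the first address seen for a name are all assumptions made for illustration.

```python
def parse_hosts(text: str) -> dict[str, str]:
    """Map each host name to the IP address found on its line."""
    mapping: dict[str, str] = {}
    for raw_line in text.splitlines():
        # Anything after '#' is a comment in the hosts format.
        line = raw_line.split("#", 1)[0].strip()
        if not line:
            continue
        # Each entry is an IP address followed by one or more names.
        ip, *names = line.split()
        for name in names:
            # Assumption for this sketch: keep the first address seen.
            mapping.setdefault(name.lower(), ip)
    return mapping


# Hypothetical sample entries, not taken from a real machine.
SAMPLE = """\
# Sample hosts entries
127.0.0.1   localhost
192.0.2.10  intranet.example.test wiki.example.test  # two aliases
"""

hosts = parse_hosts(SAMPLE)
print(hosts["wiki.example.test"])  # prints 192.0.2.10
```

On Windows the real file normally lives at `C:\Windows\System32\drivers\etc\hosts`, and editing it requires administrator rights.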

Quick Accent

An accent is a small mark above a letter that usually indicates which vowel should be stressed when the word is pronounced. If you don’t have a keyboard that supports accented characters, you can use this tool instead.

PowerToys Run

PowerToys Run is a launcher for power users that lets you search for apps, files, folders, and running processes, and perform a whole load of other tasks, including:

- Execute system commands

- Open webpages or perform a web search

- Convert units

- Return time and date information

- Perform simple calculations

How to use Microsoft PowerToys

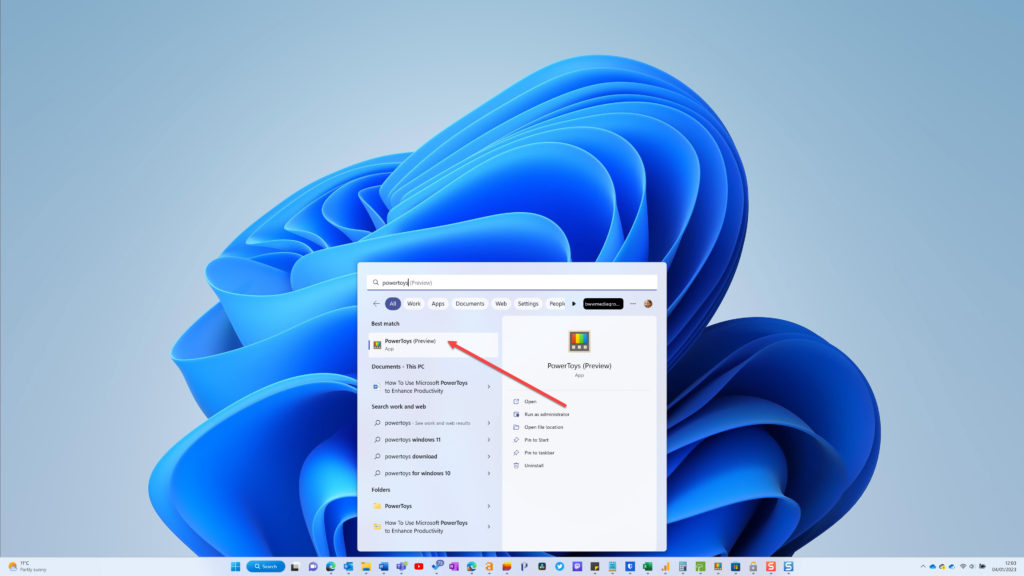

Once you have installed PowerToys on your system, you can access it by pressing the WIN key, typing PowerToys, and selecting it from the list of search results under Best match.

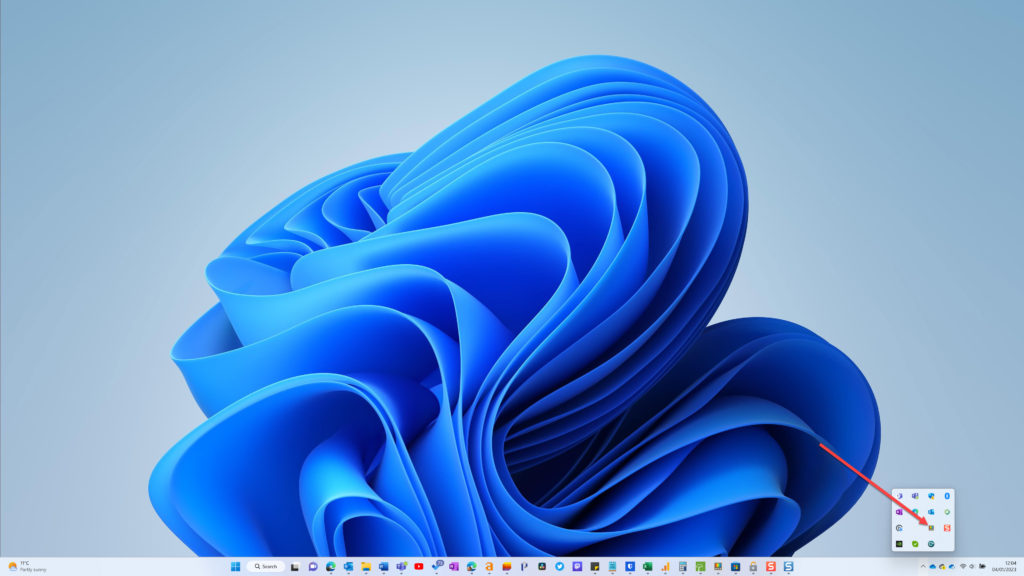

Alternatively, you should find the PowerToys icon in the system tray. Double-click it to open PowerToys.

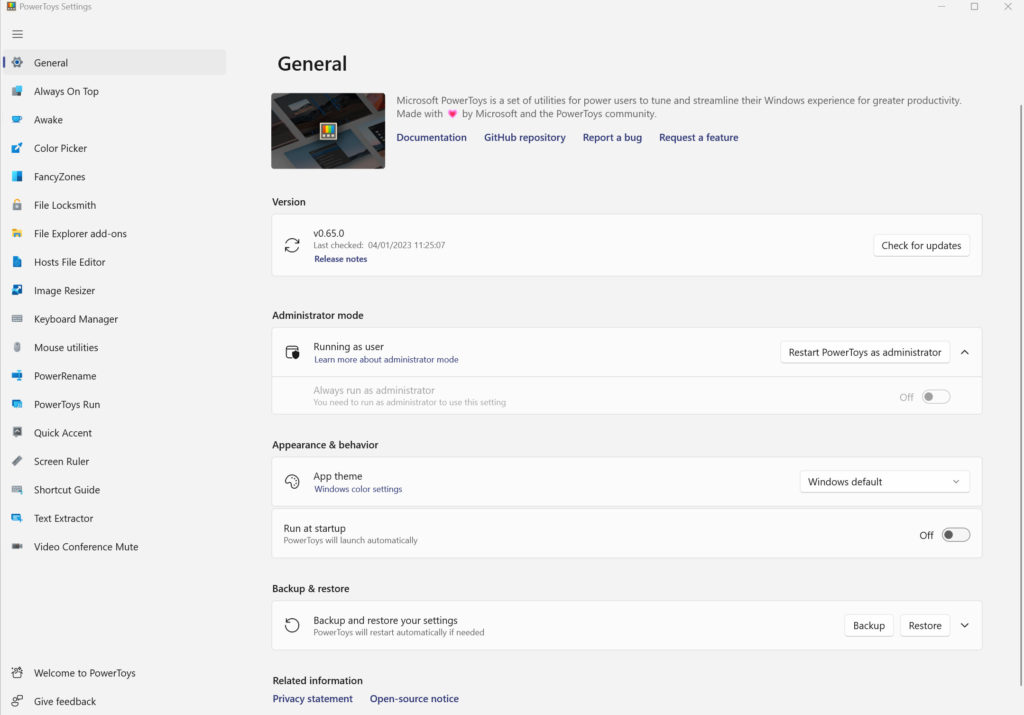

In the PowerToys app, there is a page with general settings. The general settings page lets you check for updates, run the tool as an administrator, and change the Windows Theme used by PowerToys.

There are also settings pages for each PowerToys tool. Some tools are turned on by default. Others you will need to manually enable. All the tools can be enabled or disabled as you prefer.

Most of the tools are associated with a keyboard shortcut that can be used to launch them. You can either accept the default shortcut or assign your own.

I’m going to explain a little more about how to use my favorite PowerToys tools and why I find them useful.

PowerToys Run

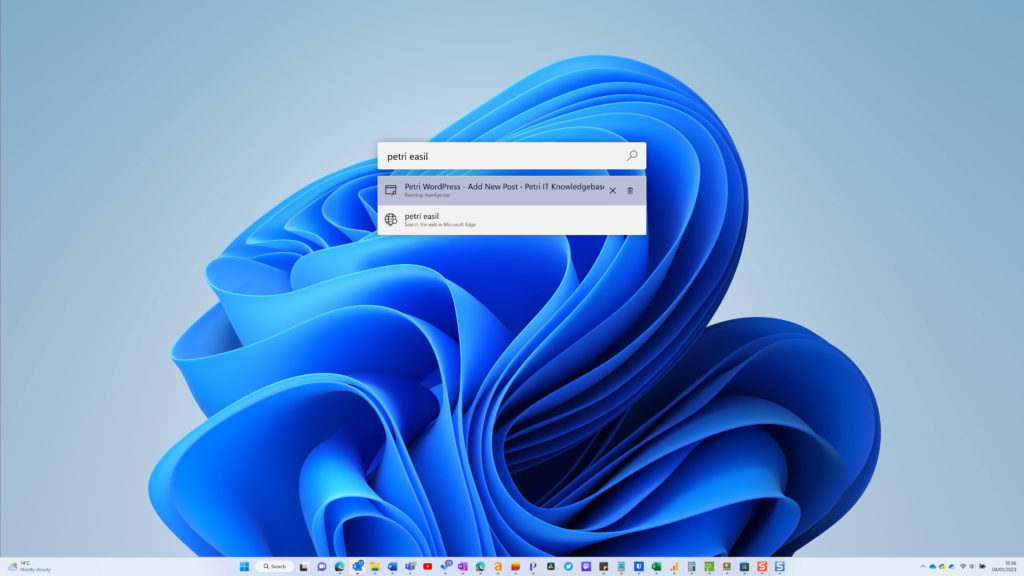

Windows doesn’t have a built-in application launcher like Alfred in macOS. So, if you’re coming from a Mac, you’ll find this especially useful. One of the main frustrations I have with Windows is quickly finding and switching to open browser tabs. Very often, I’ll have many tabs open across different browser windows and even different browser profiles – I have one profile for work and another for everything else.

While Google Chrome and Microsoft Edge both have a built-in feature for searching open tabs (CTRL+SHIFT+A), it doesn’t work across profiles. For example, if I need to find a tab that’s open on my work profile but I’m searching from my personal browser profile, CTRL+SHIFT+A doesn’t help much.

PowerToys, however, can search open tabs regardless of which instance of the browser they are running in, using a feature that was previously called Window Walker.

For example, I know I have a tab open called ‘Keyword Research Prototype’, but I’ve no idea where it’s running. All I need to do is type the name of the webpage into PowerToys Run, which I activate by pressing ALT + SPACE. Up it comes, and I can switch straight to the open browser tab.

As you can see from the search results, you can also use it to perform a web search or search OneNote Notes, and much more. But the ability to search open browser tabs is my favorite use of Run and it saves a lot of time when I have many tabs open.

The search experience for open browser tabs in PowerToys Run isn’t perfect but more often than not, it will allow me to quickly find what I’m looking for. If you only have a single browser profile in Google Chrome or Microsoft Edge, then the built-in tab search is probably your best bet.

Awake

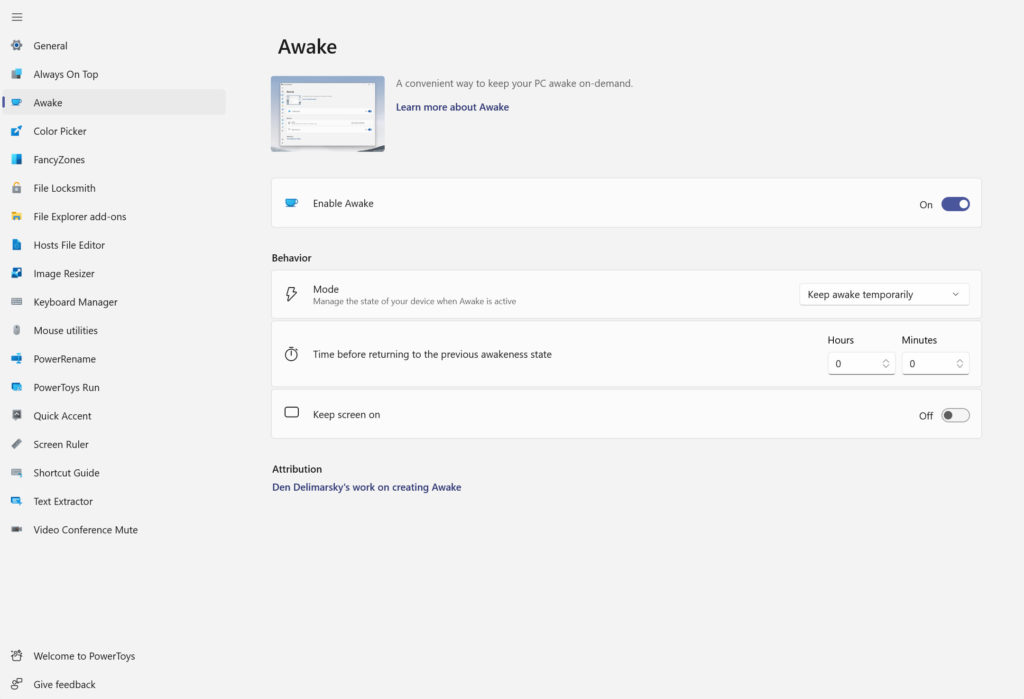

Awake lets you prevent Windows from sleeping without having to change the power settings. In many cases, applications block Windows from sleeping if an important task is in progress, like during a Teams meeting or when you are presenting a PowerPoint deck.

But there are situations where you might want to manually prevent sleep. For instance, when I’m using a video editor I want to prevent my PC from going to sleep. The editor doesn’t handle resuming from sleep well.

Awake allows me to block sleep mode quickly and easily by simply enabling the tool in the PowerToys app and then selecting either Keep awake indefinitely or Keep awake temporarily and setting a time.

Color Picker

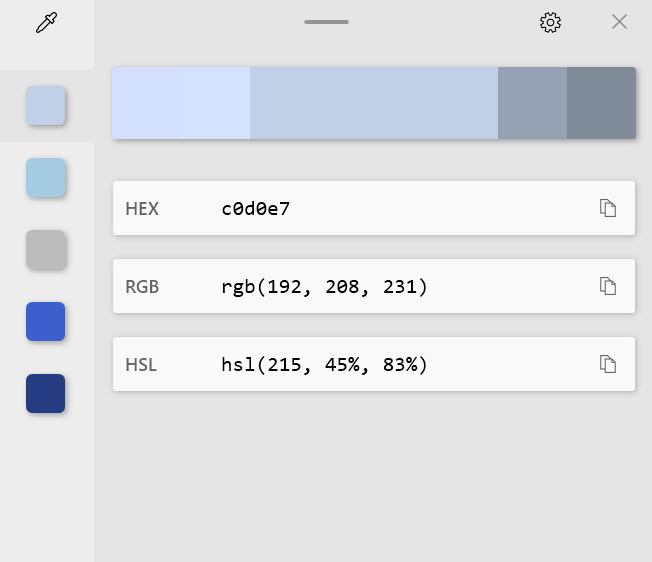

If you ever need to do design work, you may need to match colors or copy a color to make sure you keep a new design on brand. Having the ability to understand the hex, RGB, or HSL code for a color can speed up your process.

Color Picker allows you to sample a graphic, or any part of the screen, and then have the color returned in one of the formats I mentioned above. The tool is activated using a keyboard shortcut that you can define. By default the shortcut is WIN+SHIFT+C.
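If you want to see how the three formats relate to each other, here is a short Python sketch that converts a hex code to RGB and then to HSL using the standard library's `colorsys` module. The helper names `hex_to_rgb` and `rgb_to_hsl` are my own, and the rounding to whole degrees and percentages is an assumption; Color Picker may format its values differently.

```python
import colorsys


def hex_to_rgb(code: str) -> tuple[int, int, int]:
    """Convert a code such as '#FF8000' to an (R, G, B) tuple of 0-255 values."""
    digits = code.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected 6 hex digits, got {code!r}")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return r, g, b


def rgb_to_hsl(r: int, g: int, b: int) -> tuple[int, int, int]:
    """Return (hue in degrees, saturation %, lightness %), rounded to integers."""
    # colorsys works on 0-1 floats and returns values in H, L, S order.
    h, l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
    return round(h * 360), round(s * 100), round(l * 100)


rgb = hex_to_rgb("#FF8000")
print(rgb)               # (255, 128, 0)
print(rgb_to_hsl(*rgb))  # (30, 100, 50)
```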

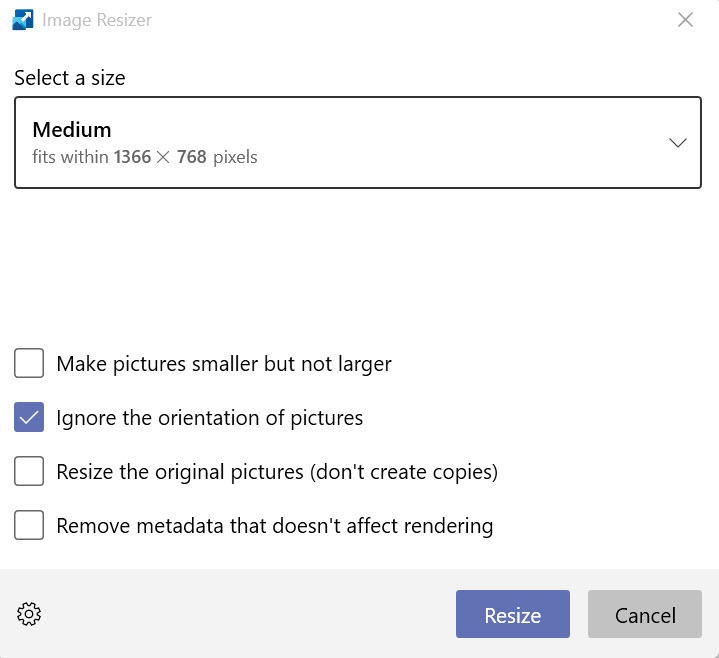

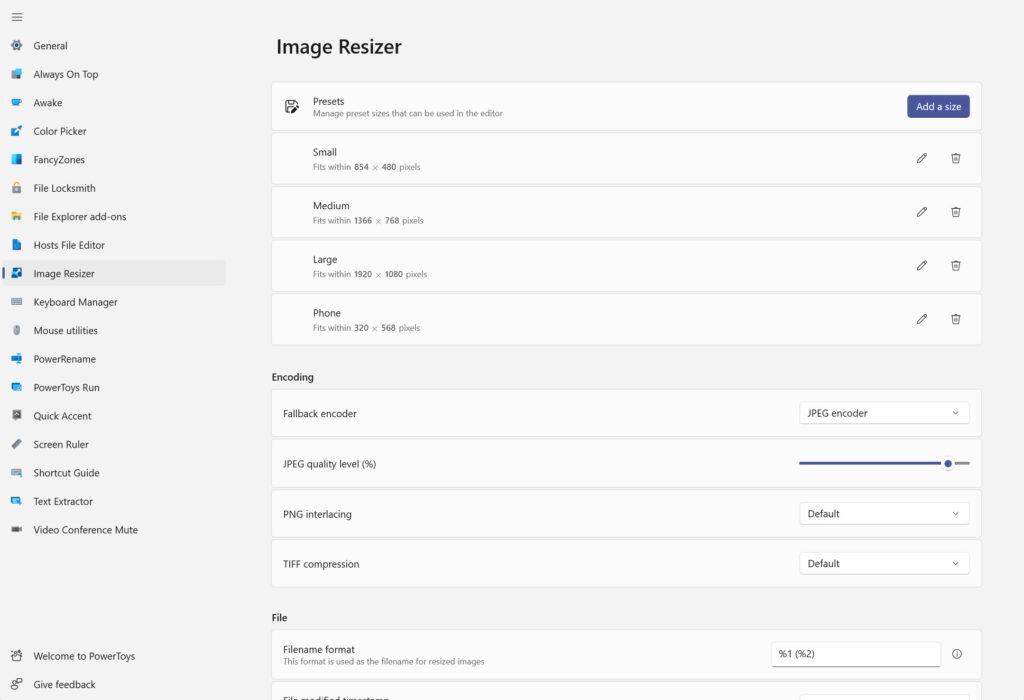

Image Resizer

Occasionally, I need to quickly resize an image, either because the dimensions are wrong or it is too big to upload. Image Resizer integrates with File Explorer and lets you resize an image by right-clicking the file and selecting Resize picture.

You then choose from a list of predefined sizes and click OK. You can define your own size options in the PowerToys app and configure the encoding quality. It’s faster and simpler than opening your image editor.
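The arithmetic behind fitting an image into a preset size is simple enough to sketch in Python. This is only an illustration of aspect-ratio scaling, not Image Resizer's actual code: the function `fit_within`, the preset names and dimensions in `PRESETS`, and the decision never to enlarge an image are all assumptions.

```python
def fit_within(width: int, height: int,
               max_width: int, max_height: int) -> tuple[int, int]:
    """Scale (width, height) to fit inside the box, keeping the aspect ratio."""
    # The tighter of the two limits decides the scale factor.
    # Capping at 1.0 means small images are never enlarged (an assumption).
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


# Hypothetical presets for illustration only.
PRESETS = {"Small": (854, 480), "Large": (1920, 1080)}

print(fit_within(4000, 3000, *PRESETS["Large"]))  # (1440, 1080)
print(fit_within(800, 600, *PRESETS["Large"]))    # (800, 600), already fits
```

A 4000 x 3000 photo is limited by height here, so it comes out at 1440 x 1080 rather than the full 1920 pixels wide.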

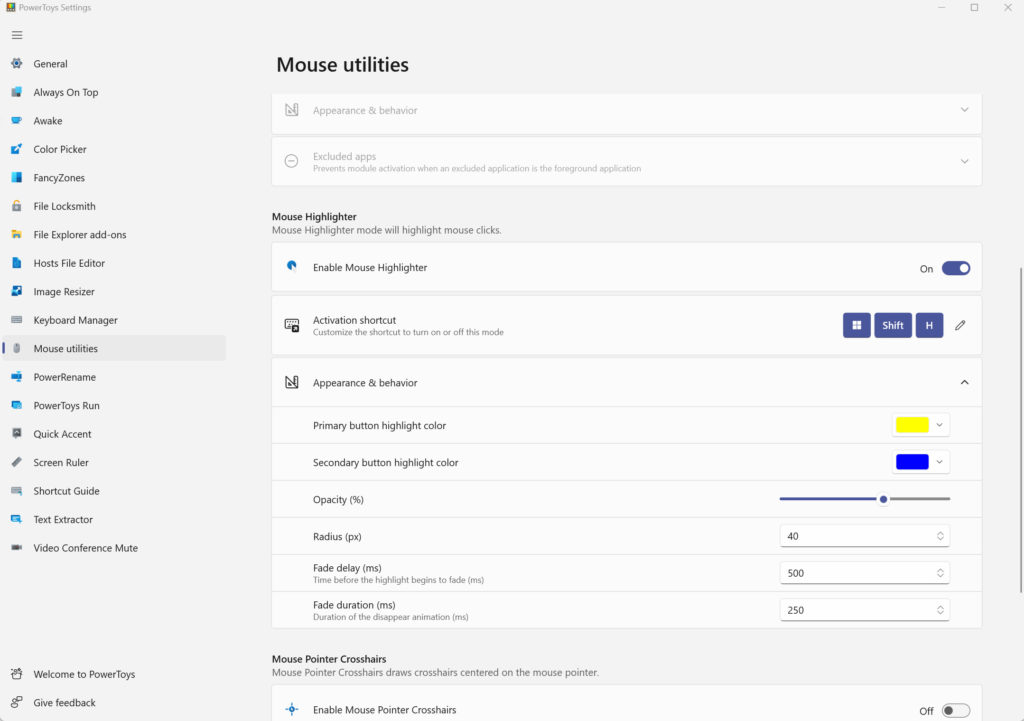

Mouse Utilities

Last but not least, I use the Mouse Highlighter tool that’s part of Mouse utilities. It’s useful to highlight the mouse when recording tutorial videos because it helps guide the viewer’s eye as you move the pointer around the screen.

The default activation keyboard shortcut is WIN+SHIFT+H. You have to left-click the mouse to get the highlighter to appear.

PowerToys has a tool for everyone

PowerToys contains a host of useful tools. I’ve just covered a few of my favorites in this article. But depending on how you use your PC, these or other tools might prove to be useful. Let me know in the comments below if you use PowerToys and what you think of the toolset.

A completely free Unreal Tournament 3 just leaked on Steam

Epic just closed some doors, but it may be opening a very attractive window indeed. Today, it appears to have inadvertently announced a new completely free version of the excellent Unreal Tournament 3 that you’ll be able to crossplay with friends across Steam, the Epic Games Store, and even GOG (via Wario64).

It’s called Unreal Tournament 3 X, and according to its Steam page, there’s no catch — “No microtransactions and no strings attached. This is the fully-featured, award-winning first-person shooter you fell in love with... completely free.”

And, assuming the listing is accurate, the game...

Obligatory ChatGPT Post

Seems like absolutely everyone everywhere is playing with ChatGPT.

So I did, too….

Write an essay in the style of Bruce Schneier on how ChatGPT will affect cybersecurity.

As with any new technology, the development and deployment of ChatGPT is likely to have a significant impact on the field of cybersecurity. In many ways, ChatGPT and other AI technologies hold great promise for improving the ability of organizations and individuals to defend against cyber threats. At the same time, however, the use of these technologies also raises important questions and concerns about the potential risks and challenges they may pose.

One of the key ways in which ChatGPT is likely to affect cybersecurity is by enabling organizations to more quickly and accurately identify potential security threats. With its ability to analyze vast amounts of data and identify patterns and trends that might be difficult for humans to spot, ChatGPT could be a valuable tool for detecting and responding to cyberattacks. This could be especially useful in the case of complex, coordinated attacks that might otherwise be difficult to identify and defend against.

Another potential benefit of ChatGPT in the realm of cybersecurity is its ability to improve communication and coordination among different organizations and agencies. By enabling these groups to more easily share information and work together to defend against cyber threats, ChatGPT could help to make the overall cybersecurity landscape more effective and efficient. This could be especially important in the case of large-scale attacks that require a coordinated response from multiple organizations.

Of course, as with any new technology, there are also potential risks and challenges associated with the use of ChatGPT in the field of cybersecurity. One key concern is the potential for ChatGPT to be used by malicious actors to conduct cyberattacks. By automating the process of planning and executing attacks, ChatGPT could make it easier for attackers to carry out sophisticated, coordinated attacks at a much faster pace than would be possible without AI. This could potentially make it more difficult for organizations and individuals to defend against such attacks.

Another potential risk is the possibility that ChatGPT could be used to create fake news or propaganda, which could be used to spread misinformation or confusion. In the context of cybersecurity, the spread of false information could potentially lead to confusion and panic, making it more difficult for organizations and individuals to respond effectively to cyber threats. Additionally, the use of ChatGPT to create fake news or propaganda could also undermine trust in information and communications systems, potentially undermining the overall security of these systems.

Overall, the development and deployment of ChatGPT is likely to have a significant impact on the field of cybersecurity. While ChatGPT and other AI technologies hold great promise for improving the ability of organizations and individuals to defend against cyber threats, it is important to carefully consider the potential risks and challenges they may pose, and to take appropriate measures to mitigate these risks. This may include implementing strict security protocols and safeguards to prevent unauthorized access to ChatGPT and other AI systems, as well as ongoing monitoring and evaluation to ensure that these technologies are being used in a safe and responsible manner.

Not bad. I don’t think I can retire, but I probably can go on vacation for a few days. And, of course, it’s going to get better…a lot better…and probably sooner than we expect. This is a big deal.

Apple Is Finally Encrypting iCloud Backups

After way too many years, Apple is finally encrypting iCloud backups:

Based on a screenshot from Apple, these categories are covered when you flip on Advanced Data Protection: device backups, messages backups, iCloud Drive, Notes, Photos, Reminders, Safari bookmarks, Siri Shortcuts, Voice Memos, and Wallet Passes. Apple says the only “major” categories not covered by Advanced Data Protection are iCloud Mail, Contacts, and Calendar because “of the need to interoperate with the global email, contacts, and calendar systems,” according to its press release.

You can see the full list of data categories and what is protected under standard data protection, which is the default for your account, and Advanced Data Protection on Apple’s website.

With standard data protection, Apple holds the encryption keys for things that aren’t end-to-end encrypted, which means the company can help you recover that data if needed. Data that’s end-to-end encrypted can only be decrypted on “your trusted devices where you’re signed in with your Apple ID,” according to Apple, meaning that the company, or law enforcement or hackers, cannot access your data from Apple’s databases.

Note that this system doesn’t have the backdoor that was in Apple’s previous proposal, the one put there under the guise of detecting CSAM.

Apple says that it will roll out worldwide by the end of next year. I wonder how China will react to this.

In Fusion Breakthrough, US Scientists Reportedly Produce Reaction With Net Energy Gain

The Decoupling Principle

This is a really interesting paper that discusses what the authors call the Decoupling Principle:

The idea is simple, yet previously not clearly articulated: to ensure privacy, information should be divided architecturally and institutionally such that each entity has only the information they need to perform their relevant function. Architectural decoupling entails splitting functionality for different fundamental actions in a system, such as decoupling authentication (proving who is allowed to use the network) from connectivity (establishing session state for communicating). Institutional decoupling entails splitting what information remains between non-colluding entities, such as distinct companies or network operators, or between a user and network peers. This decoupling makes service providers individually breach-proof, as they each have little or no sensitive data that can be lost to hackers. Put simply, the Decoupling Principle suggests always separating who you are from what you do.

Lots of interesting details in the paper.

Gut Bacteria Are Linked To Depression

PSA: Do Not Use Services That Hate The Internet

mkalus shared this story.

As you look around for a new social media platform, I implore you, only use one that is a part of the World Wide Web. tl;dr avoid Hive and Post.

If posts in a social media app do not have URLs that can be linked to and viewed in an unauthenticated browser, or if there is no way to make a new post from a browser, then that program is not a part of the World Wide Web in any meaningful way.

Consign that app to oblivion.

Most social media services want to lock you in. They love their walled gardens and they think that so long as they tightly control their users and make it hard for them to escape, they will rule the world forever.

This was the business model of Compuserve. And AOL. And then a little thing called The Internet got popular for a minute in the mid 1990s, and that plan suddenly didn't work out so well for those captains of industry.

The thing that makes the Internet useful is interoperability. These companies hate that. The thing that makes the Internet become more useful is the open source notion that there will always be more smart people who don't work for your company than that do, and some of those people will find ways to expand on your work in ways you never anticipated. These companies hate that, too. They'd rather you have nothing than that you have something they don't own.

Instagram started this trend: they didn't even have a web site until 2012. It was phone-app-only. They were dragged kicking and screaming onto the World Wide Web by, ironically, Facebook, who bought them to eliminate them as competition.

Hive Social is exactly this app-only experience. Do not use Hive. Anyone letting that app -- or anything like it -- get its hooks into them is making a familiar and terrible mistake. We've been here before. Don't let it happen again.

So many people, who should know better, blogging about their switch to Hive on the basis of user experience or some other vacuous crap, and not fundamentals like, "Is this monetized, and if not yet, when how and who?" or "who runs this?" or "is it sane to choose another set of castle walls to live as a peasant within?"

Post Dot News also seems absolutely vile.

First of all, Marc Andreessen is an investor, and there is no redder red flag than that. "How much more red? None. None more red", as Spinal Tap would say. He's a right wing reactionary whose idea of "free speech" is in line with Musk, Trump, Thiel and the rest of the Klept.

Second, it appears to be focused on "micropayments", which these days means "cryptocurrency Ponzi schemes", another of marca's favorite grifts.

They call themselves "a platform for real people, civil conversations". So, Real Names Policy and tone policing by rich white dudes is how I translate that. But hey, at least their TOS says they won't discriminate against billionaires:

life, liberty, and the pursuit of happiness, regardless of their gender, religion, ethnicity, race, sexual orientation, net worth, or beliefs.

Mastodon is kind of a mess right now, and maybe it will not turn out to be what you or I are looking for. But to its credit, interoperability is at its core, rather than being something that the VCs will just take away when it no longer serves their growth or onboarding projections.

There is a long history of these data silos (and very specifically Facebook, Google and Twitter) being interoperable, federating, providing APIs and allowing others to build alternate interfaces -- until they don't. They keep up that charade while they are small and growing, and drop it as soon as they think they can get away with it, locking you inside.

Incidentally, and tangentially relatedly, Signal is not a messaging program but rather is a sketchy-as-fuck growth-at-any-cost social network. Fuck Signal too.

Apple Hobbled Protesters' Tool in China Weeks Before Widespread Protests

Europe’s security is at stake in Moldova

Maia Sandu is the president of the Republic of Moldova.

Three decades ago, Moldovans chose freedom and democracy over authoritarianism. And today, we’re moving decisively toward the European Union.

But with Russia’s brutal aggression against our neighbor Ukraine, our country now faces dramatic costs and heightened risks threatening to derail our chosen path, weakening Europe’s security.

Moldova is a dynamic democracy in what has become a dangerous neighborhood.

Over the past year, we’ve been building stronger institutions, fighting corruption and supporting the post-pandemic recovery. As a result, our economy grew by 14 percent in 2021; we jumped 49 ranks on the Reporters Without Borders Press Freedom Index; and our anti-money laundering rating was upgraded by the Council of Europe.

In recognition of our implementation of difficult reforms in a challenging geopolitical context, the EU granted Moldova candidate status for membership this June. But instead of enjoying the benefits of deeper European integration, Moldovans are now struggling to cope with an acute energy crisis, a severe economic downturn and massive security threats.

Many, if not all, European nations are currently facing serious energy pressures, of course — but ours are existential. The legacy of almost full dependence on Russia for gas and electricity, and the failure by successive governments to diversify supplies, is now threatening our economic survival.

As of November, Russia’s bombing of Ukraine’s energy infrastructure, alongside Gazprom halving natural gas exports, has eliminated power from our previous sources of imported electricity.

In response, the government has adopted energy austerity measures and switched some industries to alternative fuels. Longer-term energy security measures, including an electricity interconnection with Romania, will yield results but only in a few years.

Friends and partners are also providing support to the best of their abilities.

Romania, our good neighbor and strong supporter, has stepped in, with electricity exports now accounting for about 80 percent of Moldova’s current consumption. Meanwhile, on a recent visit to Chișinău, European Commission President Ursula von der Leyen announced fresh assistance to help ameliorate Moldova’s crisis, and the European Bank for Reconstruction and Development has thrown a lifeline to finance emergency gas supplies.

Today, French President Emmanuel Macron is hosting a meeting of the Moldova Support Platform — an initiative led by France, Germany and Romania.

The forum aims to mobilize much-needed support ahead of what is likely to be a precarious winter. Moldova is looking to finance its gas and electricity purchases from new sources and assist social schemes for the most vulnerable, which will help cushion price increases. Over the past 12 months, the price of gas in our country grew seven times over, while the price of electricity saw a four-fold increase. This winter, Moldovans are likely to spend up to 65 percent of their income on energy bills.

If we can light and heat homes in our country and make sure that schools and hospitals can still operate and the economy’s wheels keep turning, this would mean that Moldovans — alongside Ukrainians — need not seek refuge elsewhere in Europe during this upcoming cold season.

Even before winter fully sets in, the energy crisis and the economic fallout from the war next door are already having a significant impact on people’s lives, the country’s economy and our future growth. Inflation is approaching 35 percent; prices have skyrocketed; trade routes are disrupted; and investor sentiment has weakened. As a result, the economy is likely to contract.

Meanwhile, Russia’s proxies and criminal groups have joined forces to exploit the energy crisis and fuel discontent. They hope to foment political turmoil. Using the entire spectrum of hybrid threats — including fake bomb alerts, cyberattacks, disinformation, calls for social unrest and unconcealed bribery — they are working to destabilize the government, erode our democracy and jeopardize Moldova’s contribution to Europe’s wider security.

Our vulnerabilities could weaken Ukraine’s resilience, as well as stability on the rest of the continent.

While we are Ukraine’s most vulnerable neighbor, we also secure its second-longest border — after the one it has with Russia. Across these 1,222 kilometers, Moldova is a frontline state in the fight against weapons, drugs and human trafficking.

Since the beginning of the war, we’ve worked hard to maintain stability in the breakaway Transnistrian region, which shares a border over 450 kilometers long with Ukraine and where 1,600 Russian troops are stationed illegally. We’ve managed to keep the situation calm.

We also provide essential supply routes to and from Ukraine: a significant part of Ukrainian trade, including grains, goes through Moldova.

Additionally, our country has sheltered more than 650,000 refugees since the first days of the Russian invasion. So far, over 80,000 of them have chosen to stay, and we’re preparing to host more in the winter, should they need to flee a military escalation or lack of heat, electricity and water.

Europe and Ukraine need a strong Moldova. Strong enough to support Ukraine during the war. Strong enough to maintain peace and stability in our region. Strong enough to shelter refugees. And strong enough to become a natural hub for the reconstruction of southern Ukraine after the war.

Just as Russia mustn’t be allowed to win in Ukraine, its hybrid techniques mustn’t be allowed to succeed in Moldova. We will do our part to defend European values despite the privations imposed on us. The price is heavy, and we are prepared to carry the burden.

But we can’t do this alone.

Another Event-Related Spyware App

Last month, we were warned not to install Qatar’s World Cup app because it was spyware. This month, it’s Egypt’s COP27 Summit app:

The app is being promoted as a tool to help attendees navigate the event. But it risks giving the Egyptian government permission to read users’ emails and messages. Even messages shared via encrypted services like WhatsApp are vulnerable, according to POLITICO’s technical review of the application, and two of the outside experts.

The app also provides Egypt’s Ministry of Communications and Information Technology, which created it, with other so-called backdoor privileges, or the ability to scan people’s devices.

On smartphones running Google’s Android software, it has permission to potentially listen into users’ conversations via the app, even when the device is in sleep mode, according to the three experts and POLITICO’s separate analysis. It can also track people’s locations via smartphone’s built-in GPS and Wi-Fi technologies, according to two of the analysts.

A Digital Red Cross

The International Committee of the Red Cross wants some digital equivalent to the iconic red cross, to alert would-be hackers that they are accessing a medical network.

The emblem wouldn’t provide technical cybersecurity protection to hospitals, Red Cross infrastructure or other medical providers, but it would signal to hackers that a cyberattack on those protected networks during an armed conflict would violate international humanitarian law, Tilman Rodenhäuser, a legal adviser to the International Committee of the Red Cross, said at a panel discussion hosted by the organization on Thursday.

I can think of all sorts of problems with this idea and many reasons why it won’t work, but those also apply to the physical red cross on buildings, vehicles, and people’s clothing. So let’s try it.

EDITED TO ADD: Original reference.

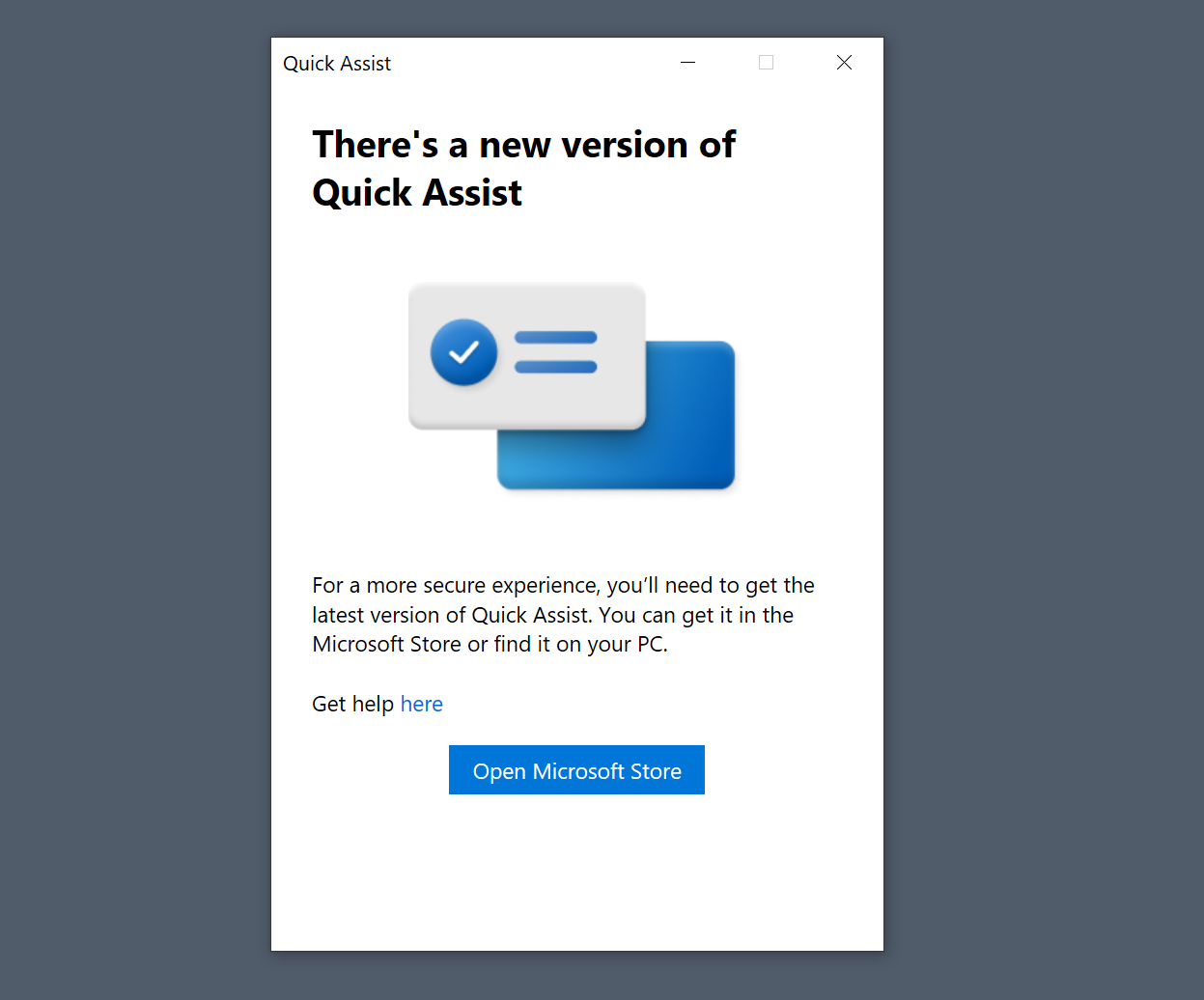

New Quick Assist app will soon be integrated into Windows

In May 2022, Microsoft announced that it would retire the Quick Assist application built into the Windows operating system. Quick Assist is a remote assistance application, which may be used to get or give assistance. It is commonly used by technicians to provide remote assistance to an organization's workforce.

Microsoft decided to retire Quick Assist and replace it with a new Microsoft Store version. Windows users who attempt to launch Quick Assist on their devices right now get a prompt stating that there is a new version available on the Microsoft Store.

Microsoft explained back then that the new application would enable it to patch security issues and other issues more quickly in the future.

Administrators were not too happy with the change. Two main points of criticism were leveled at Microsoft. First, that the installation of the new Quick Assist required administrative privileges, and second, that one could not be sure anymore if Quick Assist was available on a user device. The latter required additional assistance to get the new version of the application on the user's device.

Microsoft released a new Windows 10 version 22H2 build and a new Windows 11 build to the Release Preview Insider channel this week; these builds add the new Microsoft Store Quick Assist application back into the operating system as a native app.

The new version will replace the classic version of Quick Assist, which can't be run anymore anyway, on the target system. Quick Assist can then be launched via the Start Menu or by using its dedicated keyboard shortcut Ctrl-Windows-Q.

Release Preview indicates that the changes will land soon in stable versions of Windows 10 and 11. Microsoft has yet to announce when this will happen though. Windows users may install the Microsoft Store application manually at any time to get access to Quick Assist right away.

You can check out the Insider Preview build announcements for Windows 10 and Windows 11 here.

Now You: have you used Quick Assist or another remote assistance tool in the past?

Thank you for being a Ghacks reader. The post New Quick Assist app will soon be integrated into Windows appeared first on gHacks Technology News.

Albert Einstein

Apple Only Commits to Patching Latest OS Version

People have suspected this for a while, but Apple has made it official. It only commits to fully patching the latest version of its OS, even though it claims to support older versions.

From ArsTechnica:

In other words, while Apple will provide security-related updates for older versions of its operating systems, only the most recent upgrades will receive updates for every security problem Apple knows about. Apple currently provides security updates to macOS 11 Big Sur and macOS 12 Monterey alongside the newly released macOS Ventura, and in the past, it has released security updates for older iOS versions for devices that can’t install the latest upgrades.

This confirms something that independent security researchers have been aware of for a while but that Apple hasn’t publicly articulated before. Intego Chief Security Analyst Joshua Long has tracked the CVEs patched by different macOS and iOS updates for years and generally found that bugs patched in the newest OS versions can go months before being patched in older (but still ostensibly “supported”) versions, when they’re patched at all.

Learned Helpfulness

Learned helplessness is an interesting idea, and Wikipedia sums up current thinking well:

Learned helplessness is the behavior exhibited by a subject after enduring repeated aversive stimuli beyond their control. It was initially thought to be caused by the subject’s acceptance of their powerlessness: discontinuing attempts to escape or avoid the aversive stimulus, even when such alternatives are unambiguously presented. Upon exhibiting such behaviour, the subject was said to have acquired learned helplessness. Over the past few decades, neuroscience has provided insight into learned helplessness and shown that the original theory had it backward: the brain’s default state is to assume that control is not present, and the presence of “helpfulness” is what is learned first. However, it is unlearned when a subject is faced with prolonged aversive stimulation.

In other words: we learn that we’ve got control, and when things go sideways, over and over again, we unlearn it.

I’ve been thinking about this a lot because I’ve been finding myself perplexingly incapable of late. I’m a smart guy with enormous privilege, financial resources, and I’ve been known to have moxie by times. And yet problems that, in theory, are solvable have been slaying me, and I’ve been grasping for reasons why.

Driving downtown this morning after dropping off Olivia, I was thinking about how this might relate to Catherine’s death and the grief surrounding it.

From her incurable cancer diagnosis in 2014 until her death in 2020 Catherine accepted her fate: she did not rail against the darkness, and accepted that she was going to die. I followed her into that, and while I generally regarded it as the right attitude, the only reasonable attitude, I’m wondering now whether that also constituted “enduring repeated aversive stimuli beyond my control.”

What is the long-term effect on the psyche from waking up every morning to be reminded of the everpresence of impending doom?

I notice my disability most when it comes to confronting gnarly problems with many interlinked aspects (aspects that often lead down blind alleys or into brick walls). These are the types of problems that I’ve always excelled at, earned my living from, and I’ve loved solving them. But not so much of late.

What describes the process of caring for someone living with incurable cancer better than “confronting gnarly problems with many interlinked aspects”?

I’ve lost my taste for the challenge; I’m exhausted by the gnarly.

I want things to be simple.

And yet they are not.

And so I need a new plan, one that lets me rebuild my sense of helpfulness. I need a way to route around the brick walls. To not get flummoxed and debilitated by a feeling of how-can-this-possibly-be-so-hard. To break down things into bite-sized chunks. To make maps of things that, at one time, I might have been able to hold in my head. To ask for help, over and over and over again. To release my attachment to completeness, perfection.

My brain has been changed by what I’ve been through, in ways I’m only just beginning to understand; it’s time that I accept that, and work to adapt.

Crypto: ‘We need to look at global regulation of crypto,’ says European Commission financial-services commissioner Mairead McGuinness

Google's 'Incognito' Mode Inspires Staff Jokes - and a Big Lawsuit

Lucille Ball

Attention is not a commodity

In one of his typically trenchant posts, titled Attentive, Scott Galloway (@profgalloway) compares human attention to oil, meaning an extractive commodity:

We used to refer to an information economy. But economies are defined by scarcity, not abundance (scarcity = value), and in an age of information abundance, what’s scarce? A: Attention. The scale of the world’s largest companies, the wealth of its richest people, and the power of governments are all rooted in the extraction, monetization, and custody of attention.

I have no argument with where Scott goes in the post. He’s right about all of it. My problem is with framing it inside the ad-supported platform and services industry. Outside of that industry is actual human attention, which is not a commodity at all.

There is nothing extractive in what I’m writing now, nor in your reading of it. Even the ads you see and hear in the world are not extractive. They are many things for sure: informative, distracting, annoying, interrupting, and more. But you do not experience some kind of fungible good being withdrawn from your life, even if that’s how the ad business thinks about it.

My point here is that reducing humans to beings who are only attentive—and passively so—is radically dehumanizing, and it is important to call that out. It’s the same reductionism we get with the word “consumers,” which Jerry Michalski calls “gullets with wallets and eyeballs”: creatures with infinite appetites for everything, constantly swimming upstream through a sea of “content.” (That’s another word that insults the infinite variety of goods it represents.)

None of us want our attention extracted, processed, monetized, captured, managed, controlled, held in custody, locked in, or subjected to any of the other verb forms that the advertising world uses without cringing. That the “attention economy” produces $trillions does not mean we want to be part of it, that we like it, or that we wish for it to persist, even though we participate in it.

Like the economies of slavery, farming, and ranching, the advertising economy relies on mute, passive, and choice-less participation by the sources of the commodities it sells. Scott is right when he says “You’d never say (much of) this shit to people in person.” Because shit it is.

Scott’s focus, however, is on what the big companies do, not on what people can do on their own, as free and independent participants in networked whatever—or as human beings who don’t need platforms to be social.

At this point in history it is almost impossible to think outside of platformed living. But the Internet is still as free and open as gravity, and does not require platforms to operate. And it’s still young: at most only decades old. In how we experience it today, with ubiquitous connectivity everywhere there’s a cellular data connection, it’s a few years old, tops.

The biggest part of that economy extracts personal data as a first step toward grabbing personal attention. That is the actual extractive part of the business. Tracking follows it. Extracting data and tracking people for ad purposes is the work of what we call adtech. (And it is very different from old-fashioned brand advertising, which does want attention, but doesn’t track or target you personally. I explain the difference in Separating Advertising’s Wheat and Chaff.)

In How the Personal Data Extraction Industry Ends, which I wrote in August 2017, I documented how adtech had grown in just a few years, and how I expected it would end when Europe’s GDPR became enforceable starting the next May.

As we now know, GDPR enforcement has done nothing to stop what has become a far more massive, and still growing, economy. At most, the GDPR and California’s CCPA have merely inconvenienced that economy, while also creating a second economy in compliance, one feature of which is the value-subtract of websites worsened by insincere and misleading consent notices.

So, what can we do?

The simple and difficult answer is to start making tools for individuals, and services leveraging those tools. These are tools empowering individuals with better ways to engage the world’s organizations, especially businesses. You’ll find a list of fourteen different kinds of such tools and services here. Build some of those and we’ll have an intention economy that will do far more for business than what it’s getting now from the attention economy, regardless of how much money that economy is making today.

Because We Still Have Net 1.0

That’s the flyer for the first salon in our Beyond the Web Series at the Ostrom Workshop, here at Indiana University. You can attend in person or on Zoom. Register here for that. It’s at 2 PM Eastern on Monday, September 19.

And yes, all those links are on the Web. What’s not on the Web—yet—are all the things listed here. These are things the Internet can support, because, as a World of Ends (defined and maintained by TCP/IP), it is far deeper and broader than the Web alone, no matter what version number we append to the Web.

The salon will open with an interview of yours truly by Dr. Angie Raymond, Program Director of Data Management and Information Governance at the Ostrom Workshop, and Associate Professor of Business Law and Ethics in the Kelley School of Business (among too much else to list here), and quickly move forward into a discussion. Our purpose is to introduce and talk about these ideas:

- That free customers are more valuable—to themselves, to businesses, and to the marketplace—than captive ones.

- That the Internet’s original promises of personal empowerment, peer-to-peer communication, free and open markets, and other utopian ideals, can actually happen without surveillance, algorithmic nudging, and capture by giants, all of which have become norms in these early years of our digital world.

- That, since the admittedly utopian ambitions behind the first two ideas require boiling oceans, it’s a good idea to try first proving them locally, in one community, guided by Ostrom’s principles for governing a commons. Which we are doing with a new project called the Byway.

This is our second Beyond the Web Salon series. The first featured David P. Reed, Ethan Zuckerman, Robin Chase, and Shoshana Zuboff. Upcoming in this series are:

- Nathan Schneider on October 17

- Roger McNamee on November 14

- Vinay Gupta on December 12

Mark your calendars for those.

And, if you’d like homework to do before Monday, here you go:

- Beyond the Web (with twelve vexing questions that cannot be answered on the Web as we know it). An earlier and longer version is here.

- The Cluetrain Manifesto (published in 1999) and New Clues (published in 2015). Are these true yet? Why not?

- Customer Commons. Dig around. See what we’re up to there.

- A New Way, Byway, and Byway FAQ. All are at Customer Commons and are works in progress. The main thing is that we are now starting work toward actual code doing real stuff. It’s exciting, and we’d love to have your help.

- Ostrom Workshop history. Also my lecture on the 10th anniversary of Elinor Ostrom’s Nobel Prize. Here’s the video (start at 11:17), and here’s the text.

- Privacy Manifesto. In wiki form, at ProjectVRM. Here’s the whole project’s wiki. And here’s its mailing list, active since I started the project at Harvard’s Berkman Klein Center (which kindly still hosts it) in 2006.

See you there!

Stephen Jay Gould

Tree-Planting Schemes Are Just Creating Tree Cemeteries

Subtracting devices

People who don’t listen to podcasts often ask people who do: “When do you find time to listen?” For me it’s always on long walks or hikes. (I do a lot of cycling too, and have thought about listening then, but wind makes that impractical and cars make it dangerous.) For many years my trusty podcast player was one or another version of the Creative Labs MuVo which, as the ad says, is “ideal for dynamic environments.”

At some point I opted for the convenience of just using my phone. Why carry an extra, single-purpose device when the multi-purpose phone can do everything? That was OK until my quixotic attachment to Windows Phone became untenable. Not crazy about either of the alternatives, I flipped a coin and wound up with an iPhone. Which, of course, lacks a 3.5mm audio jack. So I got an adapter, but now the setup was hardly “ideal for dynamic environments.” My headset’s connection to the phone was unreliable, and I’d often have to stop walking, reseat it, and restart the podcast.

If you are gadget-minded you are now thinking: “Wireless earbuds!” But no thanks. The last thing I need in my life is more devices to keep track of, charge, and sync with other devices.

I was about to order a new MuVo, and I might still; it’s one of my favorite gadgets ever. But on a recent hike, in a remote area with nobody else around, I suddenly realized I didn’t need the headset at all. I yanked it out, stuck the phone in my pocket, and could hear perfectly well. Bonus: Nothing jammed into my ears.

It’s a bit weird when I do encounter other hikers. Should I pause the audio or not when we cross paths? So far I mostly do, but I don’t think it’s a big deal one way or another.

Adding more devices to solve a device problem amounts to doing the same thing and expecting a different result. I want to remain alert to the possibility that subtracting devices may be the right answer.

There’s a humorous coda to this story. It wasn’t just the headset that was failing to seat securely in the Lightning port. Charging cables were also becoming problematic. A friend suggested a low-tech solution: use a toothpick to pull lint out of the socket. It worked! I suppose I could now go back to using my wired headset on hikes. But I don’t think I will.

Ethanol Helps Plants Survive Drought

Security and Cheap Complexity

I’ve been saying that complexity is the worst enemy of security for a long time now. (Here’s me in 1999.) And it’s been true for a long time.

In 2018, Thomas Dullien of Google’s Project Zero talked about “cheap complexity.” Andrew Appel summarizes:

The anomaly of cheap complexity. For most of human history, a more complex device was more expensive to build than a simpler device. This is not the case in modern computing. It is often more cost-effective to take a very complicated device, and make it simulate simplicity, than to make a simpler device. This is because of economies of scale: complex general-purpose CPUs are cheap. On the other hand, custom-designed, simpler, application-specific devices, which could in principle be much more secure, are very expensive.

This is driven by two fundamental principles in computing: Universal computation, meaning that any computer can simulate any other; and Moore’s law, predicting that each year the number of transistors on a chip will grow exponentially. ARM Cortex-M0 CPUs cost pennies, though they are more powerful than some supercomputers of the 20th century.

The same is true in the software layers. A (huge and complex) general-purpose operating system is free, but a simpler, custom-designed, perhaps more secure OS would be very expensive to build. Or as Dullien asks, “How did this research code someone wrote in two weeks 20 years ago end up in a billion devices?”

This is correct. Today, it’s easier to build complex systems than it is to build simple ones. As recently as twenty years ago, if you wanted to build a refrigerator you would create custom refrigerator controller hardware and embedded software. Today, you just grab some standard microcontroller off the shelf and write a software application for it. And that microcontroller already comes with an IP stack, a microphone, a video port, Bluetooth, and a whole lot more. And since those features are there, engineers use them.

The 6 best Nvidia GPUs of all time

Nvidia sets the standard so high for its gaming graphics cards that it’s actually hard to tell the difference between an Nvidia GPU that’s merely a winner and an Nvidia GPU that’s really special.

Contents

- GeForce 256

- GeForce 8800 GTX

- GeForce GTX 680

- GeForce GTX 980

- GeForce GTX 1080

- GeForce RTX 3080

- So what’s next?

Nvidia has long been the dominant player in the graphics card market, but the company has from time to time been put under serious pressure by its main rival AMD, which has launched several of its own iconic GPUs. Those only set Nvidia up for a major comeback, however, and sometimes that led to a real game-changing card.

It was hard to choose which Nvidia GPUs were truly worthy of being called the best of all time, but I’ve narrowed down the list to six cards that were truly important and made history.

GeForce 256

The very first

VGA Museum

Although Nvidia often claims the GeForce 256 was the world’s first GPU, that’s only true if Nvidia is the only company that gets to define what a GPU is. Before GeForce there were the RIVA series of graphics cards, and there were other companies making their own competing graphics cards then, too. What Nvidia really invented was the marketing of graphics cards as GPUs, because in 1999 when the 256 came out, terms like graphics card and graphics chipset were more common.

Nvidia is right that the 256 was important, however. Before the 256, the CPU played a very important role in rendering graphics, to the point where the CPU was directly completing steps in rendering a 3D environment. However, CPUs were not very efficient at doing this, which is where the 256 came in with hardware transforming and lighting, offloading the two most CPU intensive parts of rendering onto the GPU. This is one of the primary reasons why Nvidia claims this is the first GPU.
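To make "transforming and lighting" concrete, here is a minimal Python sketch of the per-vertex work that the 256 moved off the CPU: multiplying a vertex by a 4x4 matrix, then computing a simple diffuse light term. The function names, the example matrix, and the light direction are illustrative assumptions, not a description of Nvidia's actual hardware pipeline.

```python
import math

def transform(matrix, vertex):
    """Multiply a 4x4 row-major matrix by a 3D point (w is assumed to be 1)."""
    x, y, z = vertex
    v = (x, y, z, 1.0)
    out = [sum(matrix[r][c] * v[c] for c in range(4)) for r in range(4)]
    return tuple(out[:3])

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def diffuse(normal, light_dir):
    """Lambert term: cosine of the angle between the surface normal and
    the direction toward the light, clamped so back-facing surfaces get 0."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

# Hypothetical example: move a vertex 2 units along x, then light a
# surface that faces the light head-on.
translate = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
moved = transform(translate, (1.0, 1.0, 1.0))
brightness = diffuse((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
```

A 3D scene repeats this for every vertex in every frame, which is why doing it in dedicated hardware rather than on the CPU mattered.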

As a product, the GeForce 256 wasn’t exactly legendary: Anandtech wasn’t super impressed by its price for the performance at the time of its release. Part of the problem was the 256’s memory, which was single data rate, or SDR. Due to other advances, SDR was becoming insufficient for GPUs of this performance level. A faster version with double data rate memory, or DDR (the same DDR as in DDR5), launched just before the end of 1999 and finally met Anandtech’s expectations for performance, but the increased price tag of the DDR version was hard to swallow.

The GeForce 256, first of its name, is certainly historic, but not because it was an amazing product. The 256 is important because it inaugurated the modern era of GPUs. The graphics card market wasn’t always a duopoly; back in the 90s, there were multiple companies competing against each other, with Nvidia being just one of them. Soon after the GeForce 256 launched, most of Nvidia’s rivals exited the market. 3dfx’s Voodoo 5 GPUs were uncompetitive, and before the company went bankrupt many of its technologies were bought by Nvidia; Matrox simply quit gaming GPUs altogether to focus on professional graphics.

By the end of 2000, the only other graphics company in town was ATI. When AMD acquired ATI in 2006, it brought about the modern Nvidia and AMD rivalry we all know today.

GeForce 8800 GTX

A monumental leap forward

VGA Museum

After the GeForce 256, Nvidia and ATI each attempted to best the other with newer GPUs with higher performance. In 2002, however, ATI threw down the gauntlet by launching its Radeon 9000 series, and at a die size of 200mm², the flagship Radeon 9800 XT was easily the largest GPU ever. Nvidia’s flagship GeForce4 Ti 4600 at 100mm² had no hope of beating even the midrange 9700 Pro, which inflicted a crushing defeat on Nvidia. Making a GPU was no longer just about the architecture, the memory, or the drivers; in order to win, Nvidia would need to make big GPUs like ATI.

For the next four years, the size of flagship GPUs continued to increase, and by 2005 both companies had launched a GPU that was around 300mm². Although Nvidia had regained the upper hand during this time, ATI was never far behind and its Radeon X1000 series was fairly competitive. A GPU sized at 300mm² was far from the limit of what Nvidia could do, however. In 2006 Nvidia released its GeForce 8 series, led by the flagship 8800 GTX. Its GPU, codenamed G80, was nearly 500mm² and its transistor count was almost three times higher than the last GeForce flagship.

The 8800 GTX did to ATI what the Radeon 9700 Pro and the rest of the 9000 series did to Nvidia, with Anandtech describing the moment as “9700 Pro-like.” A single 8800 GTX was almost twice as fast as ATI’s top-end X1950 XTX, not to mention much more efficient. At $599, the 8800 GTX was more expensive than its predecessors, but its high level of performance and DirectX 10 support made up for it.

But this was mostly the end of the big GPU arms race that had characterized the early 2000s, for two main reasons. Firstly, 500mm² was getting pretty close to the limit of how large a GPU could be, and even today 500mm² is relatively big for a processor. Even if Nvidia wanted to, making a bigger GPU just wasn’t feasible. Secondly, ATI wasn’t working on its own 500mm² GPU anyway, so Nvidia wasn’t in a rush to get an even bigger GPU to market. Nvidia had basically won the arms race by outspending ATI.

That year also saw the acquisition of ATI by AMD, which was finalized just before the 8800 GTX launched. Although ATI now had the backing of AMD, it really seemed like Nvidia had such a massive lead that Radeon wouldn’t challenge GeForce for a long time, perhaps never again.

GeForce GTX 680

Beating AMD at its own game

Nvidia

Nvidia’s next landmark release came in 2008 when it launched the GTX 200 series, starting with the GTX 280 and GTX 260. At nearly 600mm², the 280 was a worthy, monstrous successor to the 8800 GTX. Meanwhile, AMD and ATI signaled that they would no longer be launching high-end GPUs with big dies in order to compete, rather focusing on making smaller GPUs in a gambit known as the small die strategy. In its review, Anandtech said “Nvidia will be left all alone with top performance for the foreseeable future.” As it turned out, the next four years were pretty rough for Nvidia.

Starting with the HD 4000 series in 2008, AMD assaulted Nvidia with small GPUs that had high value and almost flagship levels of performance, and that dynamic was maintained throughout the next few generations. Nvidia’s GTX 280 wasn’t cost effective enough, then the GTX 400 series was delayed, and the 500 series was too hot and power hungry.

One of Nvidia’s traditional weaknesses was its disadvantage when it came to process, the way processors are manufactured. Nvidia was usually behind AMD, but it had finally caught up by using the 40nm node for the 400 series. AMD, however, wanted to regain the process lead quickly and decided its next generation would be on the new 28nm node, and Nvidia decided to follow suit.

AMD won the race to 28nm with its HD 7000 series, with its flagship HD 7970 putting AMD back in first place for performance. However, the GTX 680 launched just two months later, and not only did it beat the 7970 in performance, but also in power efficiency and even die size. As Anandtech put it, Nvidia had “landed the technical trifecta” and that completely flipped the tables on AMD. AMD did reclaim the performance crown yet again by launching the HD 7970 GHz Edition later in 2012 (notable for being the first 1GHz GPU), but having the lead in efficiency and performance per square millimeter was a good sign for Nvidia.

The back and forth battle between Nvidia and AMD was pretty exciting after how disappointing the GTX 400 and 500 series had been, and while the 680 wasn’t an 8800 GTX, it signaled Nvidia’s return to being truly competitive against AMD. Perhaps most importantly, Nvidia was no longer weighed down by its traditional process disadvantage, and that would eventually pay off in a big way.

GeForce GTX 980

Nvidia’s dominance begins

Bill Roberson/Digital Trends

Nvidia found itself in a very good spot with the GTX 600 series, and it was because of TSMC’s 28nm process. Under normal circumstances, AMD would have simply gone to TSMC’s next process in order to regain its traditional advantage, but this was no longer an option. TSMC and all other foundries in the world (except for Intel) had an extraordinary amount of difficulty progressing beyond the 28nm node. New technologies were needed in order to progress further, which meant Nvidia didn’t have to worry about AMD regaining the process lead any time soon.

Following a few years of back and forth and AMD floundering with limited funds, Nvidia launched the GTX 900 series in 2014, inaugurated by the GTX 980. Based on the new Maxwell architecture, it was an incredible improvement over the GTX 600 and 700 series despite being on the same node. The 980 was between 30% and 40% faster than the 780 while consuming less power, and it was even a smidge faster than the top-end 780 Ti. Of course, the 980 also beat the R9 290X, once again landing the trifecta of performance, power efficiency, and die size. In its review, Anandtech said the 980 came “very, very close to doing to the Radeon 290X what the GTX 680 did to Radeon HD 7970.”

AMD was incapable of responding. It didn’t have a next-generation GPU ready to launch in 2014. In fact, AMD wasn’t even working on a complete lineup of brand-new GPUs to even the score with Nvidia. AMD instead was planning on rebranding the Radeon 200 series as the Radeon 300 series, and would develop one new GPU to serve as the flagship. All of these GPUs were to launch in mid 2015, giving the entire GPU market to Nvidia for nearly a full year. Of course, Nvidia wanted to pull the rug right from under AMD and prepared a brand-new flagship.

Launching in mid 2015, the GTX 980 Ti was about 30% faster than the GTX 980, thanks to its significantly higher power consumption and larger die size at just over 600mm². It beat AMD’s brand-new R9 Fury X a month before it even launched. Although the Fury X wasn’t bad, it had lower performance than the 980 Ti, higher power consumption, and much less VRAM. It was a demonstration of how far ahead Nvidia was with the 900 series; while AMD was hastily trying to get the Fury X out the door, Nvidia could have launched the 980 Ti any time it wanted.

Anandtech put it pretty well: “The fact that they get so close only to be outmaneuvered by Nvidia once again makes the current situation all the more painful; it’s one thing to lose to Nvidia by feet, but to lose by inches only reminds you of just how close they got, how they almost upset Nvidia.”

Nvidia was basically a year ahead of AMD technologically, and while what they had done with the GTX 900 series was impressive, it was also a bit depressing. People wanted to see Nvidia and AMD duke it out like they had done in 2012 and 2013, but it started to look like that was all in the past. Nvidia’s next GPU would certainly reaffirm that feeling.

GeForce GTX 1080

The GPU with no competition but itself

Nvidia

In 2015, TSMC had finally completed the 16nm process, which could achieve 40% higher clock speeds than 28nm at the same power or half the power of 28nm at similar clock speeds. However, Nvidia planned to move to 16nm in 2016 when the node was more mature. Meanwhile, AMD had absolutely no plans to utilize TSMC’s 16nm but instead moved to launch new GPUs and CPUs on GlobalFoundries’s 14nm process. But don’t be fooled by the names: TSMC’s 16nm was and is better than GlobalFoundries’s 14nm. After 28nm, nomenclature for processes became based on marketing rather than scientific measurements. This meant that for the first time in modern GPU history, Nvidia had the process advantage against AMD.

The GTX 10-series launched in mid-2016, based on the new Pascal architecture and TSMC’s 16nm node. Pascal wasn’t actually very different from Maxwell, but the jump from 28nm to 16nm was massive, like Intel going from 14nm on Skylake to 10nm on Alder Lake. The GTX 1080 was the new flagship, and it’s hard to overstate how fast it was. The GTX 980 was a little faster than the GTX 780 Ti when it came out. By contrast, the GTX 1080 was over 30% faster than the GTX 980 Ti, and for $50 less, too. The die size of the 1080 was also extremely impressive, at just over 300mm², nearly half the size of the 980 Ti.

With the 1080 and the rest of the 10-series lineup, Nvidia effectively took the entire desktop GPU market for itself. AMD’s 300 series and the Fury X were simply no match. At the midrange, AMD launched the RX 400 series, but these were just three low to mid-range GPUs that were a throwback to the small die strategy, minus the part where Nvidia’s flagship was in striking distance like with the GTX 280 and the HD 4870. In fact, the 1080 was nearly twice as fast as the RX 480. The only GPU AMD could really beat was the mid-range GTX 1060, as the slightly cut down GTX 1070 was just a little too fast to lose to the Fury X.

AMD did eventually launch new high-end GPUs in the form of RX Vega, a full year after the 1080 came out. With much higher power consumption and the same selling price, the flagship RX Vega 64 beat the GTX 1080 by a hair but wasn’t very competitive. However, the GTX 1080 was no longer Nvidia’s flagship; with relatively small die size and a full year to prepare, Nvidia launched a brand-new flagship a whole three months before RX Vega even launched; it was a repeat of the 980 Ti. The new GTX 1080 Ti was even faster than the GTX 1080, delivering yet another 30% improvement to performance. As Anandtech put it, the 1080 Ti “further solidifie[d] Nvidia’s dominance of the high-end video card market.”

AMD’s failure to deliver a truly competitive high-end GPU meant that the 1080’s only real competition was Nvidia’s own GTX 1080 Ti. With the 1080 and the 1080 Ti, Nvidia achieved what is perhaps the most complete victory we’ve seen so far in modern GPU history. Over the previous four years, Nvidia had kept increasing its technological advantage over AMD, and it was hard to see how Nvidia could ever lose.

GeForce RTX 3080

Correcting course

After such a long and incredible streak of wins, perhaps it was inevitable that Nvidia would succumb to hubris and lose sight of what made Nvidia’s great GPUs so great. Nvidia did not follow up the GTX 10 series with yet another GPU with a stunning increase in performance, but with the infamous RTX 20 series. Perhaps in a move to cut AMD out of the GPU market, Nvidia focused on introducing hardware accelerated ray tracing and A.I. upscaling instead of delivering better performance in general. If successful, Nvidia could make AMD GPUs irrelevant until the company finally made Radeon GPUs with built-in ray tracing.

The RTX 20-series was a bit of a flop. When the RTX 2080 and 2080 Ti launched in late 2018, there weren’t even any games that supported ray tracing or deep learning super sampling (DLSS). But Nvidia priced RTX 20-series cards as if those features made all the difference. At $699, the 2080 had a nonsensical price, and the 2080 Ti’s $1,199 price tag was even more insane. Nvidia wasn’t even competing with itself anymore.

The performance improvement in existing titles was extremely disappointing, too; the RTX 2080 was only 11% faster than the GTX 1080, though at least the RTX 2080 Ti was around 30% faster than the GTX 1080 Ti.

The next two years were a course correction for Nvidia. The threat from AMD was starting to become pretty serious; the company had finally regained the process advantage by moving to TSMC’s 7nm and launched the RX 5700 XT in mid-2019. Nvidia was able to head it off once again by launching new GPUs, this time the RTX 20 Super series with a focus on value, but the 5700 XT must have worried Nvidia. The RTX 2080 Ti was three times as large yet was only 50% faster, meaning AMD was achieving much higher performance per square millimeter. If AMD made a larger GPU, it could be difficult to beat.
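The performance-per-area comparison can be checked with a quick Python sketch. The inputs are only the rounded ratios from the paragraph above (three times the die area, 50% more performance), normalized to the 5700 XT; they are assumptions for illustration, not measured die sizes or benchmark results, and the function name is hypothetical.

```python
def perf_per_area(relative_perf, relative_area):
    """Performance delivered per unit of die area, in relative terms."""
    return relative_perf / relative_area

# Normalize everything to the RX 5700 XT (performance 1.0, area 1.0).
rx_5700_xt = perf_per_area(1.0, 1.0)

# RTX 2080 Ti: 50% faster, but on a die three times as large.
rtx_2080_ti = perf_per_area(1.5, 3.0)

# How much more performance AMD got out of each unit of area.
advantage = rx_5700_xt / rtx_2080_ti
```

Under those rounded figures, the 5700 XT delivers about twice the performance per unit of die area, which is why a larger AMD GPU looked like a real threat.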

Both Nvidia and AMD planned for a big showdown in 2020. Nvidia recognized AMD’s potential and pulled out all the stops: the new 8nm process from Samsung, the new Ampere architecture, and an emphasis on big GPUs. AMD meanwhile stayed on TSMC’s 7nm process but introduced the new RDNA 2 architecture and would also be launching a big GPU, its first since RX Vega in 2017. The last time both companies launched brand-new flagships within the same year was 2013, nearly a decade ago. Though the pandemic threatened to ruin the plans of both companies, neither was willing to delay the next generation, and both launched as planned.

Nvidia fired first with the RTX 30-series, led by the flagship RTX 3090, but most of the focus was on the RTX 3080 since at $699 it was far more affordable than the $1,499 3090. Instead of being a repeat of the RTX 20-series, the 3080 delivered a sizeable 30% bump in performance at 4K over the RTX 2080 Ti, though the power consumption was a little high. At lower resolutions, the performance gain of the 3080 was somewhat less, but since the 3080 was very capable at 4K, it was easy to overlook this. The 3080 also benefitted from a wider variety of games supporting ray tracing and DLSS, giving value to having an Nvidia GPU with those features.

Of course, this wouldn’t matter if the 3080 and the rest of the RTX 30-series couldn’t stand up to AMD’s new RX 6000 series, which launched two months later. At $649, the RX 6800 XT was AMD’s answer to the RTX 3080. With nearly identical performance in most games and at most resolutions, the battle between the 3080 and the 6800 XT was reminiscent of the GTX 680 and the HD 7970. Each company had its advantages and disadvantages, with AMD leading in power efficiency and performance while Nvidia had better performance in ray tracing and support for other features like A.I. upscaling.

The excitement over a new episode in the GPU war quickly died out though, because it soon became apparent that nobody could buy RTX 30 or RX 6000 cards, or even any GPUs at all. The pandemic had seriously reduced supply while crypto increased demand and scalpers snatched up as many GPUs as they could. At the time of writing, the shortage has mostly ended, but most Nvidia GPUs are still usually selling for $100 or more over MSRP. Thankfully, higher-end GPUs like the RTX 3080 can be found closer to MSRP than lower-end 30 series cards, which keeps the 3080 a viable option.

On the whole, the RTX 3080 was a much-needed correction from Nvidia. Although the 3080 has marked the end of Nvidia’s near total domination of the desktop GPU market, it’s hard not to give the company credit for not losing to AMD. After all, the RX 6000 series is on a much better process and AMD has been extremely aggressive these past few years. And besides, it’s good to finally see a close race between Nvidia and AMD where both sides are trying really hard to win.

So what’s next?

Unlike AMD, Nvidia always keeps its cards close to its chest and rarely ever reveals information on upcoming products. We can be pretty confident the upcoming RTX 40 series will launch sometime this year, but everything else is uncertain. One of the more interesting rumors is that Nvidia will utilize TSMC’s 5nm for RTX 40 GPUs, and if this is true then that means Nvidia will have parity with AMD once again.

But I think that as long as RTX 40 isn’t another RTX 20 and provides more low-end and mid-range options than RTX 30, Nvidia should have a good enough product next generation. I would really like for it to be so good that it makes the list of best Nvidia GPUs of all time, but we’ll have to wait and see.

The post The 6 best Nvidia GPUs of all time appeared first on AIVAnet.